Let me show you how to upgrade an Oracle Database 19c non-CDB with Data Guard to Oracle AI Database 26ai.

- I reuse data files on the primary database.

- But restore the data files on the standby database after the migration (deferred recovery).

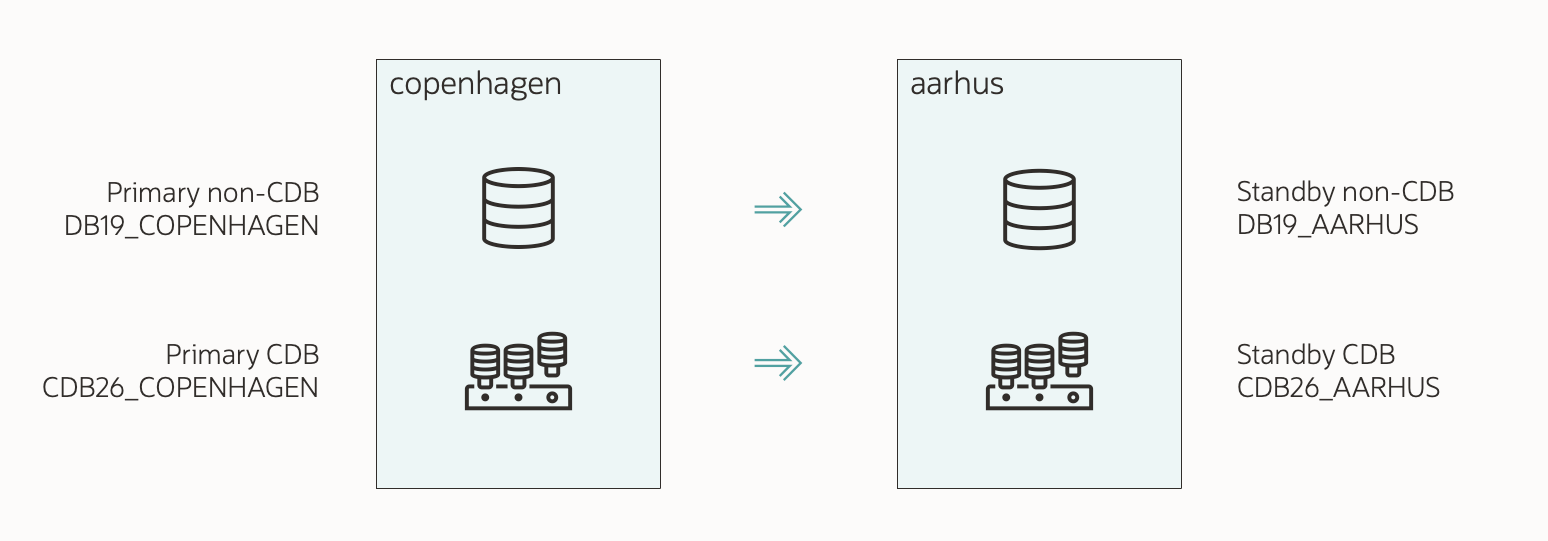

The demo environment:

- Two servers:

- COPENHAGEN (primary)

- AARHUS (standby)

- Source non-CDB:

- SID: DB19

- Primary unique name: DB19_COPENHAGEN

- Standby unique name: DB19_AARHUS

- Oracle home: /u01/app/oracle/product/dbhome_19_27

- Target CDB:

- SID: CDB26

- Primary unique name: CDB26_COPENHAGEN

- Standby unique name: CDB26_AARHUS

- Oracle home: /u01/app/oracle/product/dbhome_26_1

- Data Guard broker manages the Data Guard configuration.

- Oracle Restart (Grid Infrastructure) manages the databases.

You can still use the procedure if you don’t use Data Guard broker or Grid Infrastructure. Just be sure to change the commands accordingly.

1. Upgrade and Convert

I’ve already prepared my database and installed a new Oracle home. I’ve also created a new CDB or decided to use an existing one. The CDB is also configured for Data Guard.

The maintenance window has started, and users have left the database.

-

I ensure my standby database has received and applied all changes. Then, I stop it:

srvctl stop database -d DB19_AARHUS- I won’t need the non-CDB standby database anymore.

- For now, I shut it down and keep it just in case I need to roll back.

-

On the primary, I create an AutoUpgrade config file. I call it

upgrade26.cfg:global.global_log_dir=/home/oracle/autoupgrade/upgrade26 upg1.source_home=/u01/app/oracle/product/dbhome_19_27 upg1.target_home=/u01/app/oracle/product/dbhome_26_1 upg1.sid=DB19 upg1.target_cdb=CDB26 upg1.manage_standbys_clause=standbys=none upg1.export_rman_backup_for_noncdb_to_pdb=yessidcontains the name or SID of my database.- I specify the source and target Oracle homes. These are the Oracle homes of the source non-CDB and target CDB, respectively.

manage_standbys_clause=standbys=noneinstructs AutoUpgrade to plug the PDB in with deferred recovery.export_rman_backup_for_noncdb_to_pdbinstructs AutoUpgrade to export RMAN metadata usingDBMS_PDB.EXPORTRMANBACKUP. This makes it easier to use pre-plugin backups (see appendix).

-

On the primary host, I start AutoUpgrade to plug in, upgrade, and convert:

java -jar autoupgrade.jar -mode deploy -config upgrade26.cfg -

While the job progresses, I monitor it:

upg> lsj -a 30- The

-a 30option automatically refreshes the information every 30 seconds. - I can also use

status -job 100 -a 30to get detailed information about a specific job.

- The

-

When the upgrade completes, check the summary report for details:

cat /home/oracle/autoupgrade/upgrade26/cfgtoollogs/upgrade/auto/status/status.log -

The summary report lists the following details from the POSTCHECKS stage:

The following PDB(s) were created with standbys=none option. Refer to the postcheck result /home/oracle/autoupgrade/upgrade26/DB19/100/postchecks/db19_copenhagen_postupgrade.log for more details on manual actions needed. DB19

-

I find additional details in the referenced log file:

[action] Manual steps need to be performed after upgrade to copy the files to the standby database and enable recovery of the PDB from PRIMARY to STANDBY. Refer to MOS document Doc ID 1916648.1 for detailed steps. [broken rule] The following PDB(s) [DB19] were created with standbys=none option. [rule] On a Data Guard configuration, the CREATE PLUGGABLE DATABASE statement needs to be executed with clause STANDBYS=NONE to avoid impacting redo apply. That clause allows for deferral of file instantiation on the standby and the physical standby database to continue to protect existing pluggable databases. The clause allows the general structure of the PDB to be created on all physical standbys but all files belonging to the PDB are marked as OFFLINE/RECOVER at the standby.

- I deal with this issue in the next chapter.

2. Restore PDB on Standby

-

On the standby database, stop redo apply in the standby CDB using

dgmgrl:edit database CDB26_AARHUS set state='apply-off'; -

Use RMAN to connect to the standby CDB:

rman target sys@CDB26_AARHUS -

Restore the PDB and switch to the new data files:

run { allocate channel d1 device type disk; allocate channel d2 device type disk; set newname for pluggable database DB19 to new; restore pluggable database DB19 from service CDB26_COPENHAGEN; } -

Connect to the standby CDB and enable recovery of the PDB:

alter session set container=DB19; alter pluggable database enable recovery; -

Then, online all the data files in the PDB:

select file#, status from v$datafile; alter database datafile <file#> online; alter database datafile <file#> online; ... alter database datafile <file#> online; -

Restart redo apply in the standby CDB:

edit database CDB26_AARHUS set state='apply-on'; -

Connect to the standby CDB and verify the PDB’s recovery status (should be ENABLED). Ensure that the recovery process is running (should be APPLYING_LOG):

select recovery_status from v$pdbs where name='DB19'; select process, status, sequence# from v$managed_standby where process like 'MRP%'; -

Optionally, but strongly recommended, perform a switchover as the ultimate test. Connect to

dgmgrlusing username and password:dgmgrl sys as sysdba -

Perform the switchover:

validate database "CDB26_AARHUS"; switchover to "CDB26_AARHUS"; -

Then, finally, I connect to the new primary CDB, CDB26_AARHUS. I ensure the PDB is open in READ WRITE mode and unrestricted. I check the status of all data files is SYSTEM or ONLINE:

alter pluggable database DB19 open; alter session set container=DB19; select open_mode, restricted, recovery_status from v$pdbs; select name, status from v$datafile;

3. Post-upgrade Tasks

-

I take care of the post-upgrade tasks.

-

I update any profiles or scripts that use the database.

-

I remove the non-CDB entry from

/etc/orataband Grid Infrastructure on the standby. AutoUpgrade takes care of the primary. -

I clean up and remove the old source non-CDB. On both primary and standby, I remove: * Database files, like PFile, SPFile, password file, control file, and redo logs. * Database directory, like

diagnostic_dest,adump, ordb_recovery_file_dest. -

On the standby, I remove the source non-CDB data files using

asmcmd:cd DATA rm -rf DATA/DB19_AARHUS/DATAFILE- Important: I don’t do that on the primary because I reused the data files during the plug-in.

That’s It!

I’m using AutoUpgrade for the entire upgrade; nice and simple. I must take care of the standby database after the migration.

When I reuse the data files on the primary database, I no longer have the non-CDB for rollback. Be sure to plan accordingly.

Check the other blog posts related to upgrade to Oracle AI Database 26ai.

Happy upgrading!

Appendix

Data Files in ASM/OMF

Primary Database

-

When I create the PDB, I reuse the data files from the primary database. The data files are in the OMF location of the non-CDB database, e.g.:

+DATA/DB19_COPENHAGEN/DATAFILE -

However, after plug-in, the proper OMF location for my PDB data files is:

+DATA/CDB26_COPENHAGEN/<PDB GUID>/DATAFILE -

The CDB doesn’t care about this anomaly. However, if I want to conform to the OMF naming standard, I must move the data files. Find the data files:

select file#, name from v$datafile; -

I use online datafile move to move those files in the wrong location. I don’t specify a new data file name, so the database generates an OMF name:

alter database move datafile <file#>;- Online data file move creates a copy of the data files before switching to the new file and dropping the old one. So I need additional disk space, and the operation takes time while the database copies the file.

Standby Database

- There is nothing to do on the standby database. When I restore the data files, they are placed in the right OMF location.

Rollback Options

When you convert a non-CDB to a PDB, you can’t use Flashback Database as a rollback method. You need to rely on other methods, like:

- RMAN backups

- Storage snapshots

- Standby database (the source non-CDB standby database, which you leave behind)

- Refreshable clone PDB

What If I Have Multiple Standby Databases?

You must repeat the relevant steps for each standby database.

You can mix and match standbys with deferred and enabled recovery. Let’s say that you want to use enabled recovery on DR standbys and deferred recovery on the reporting standbys.

| Role | Name | Method |

|---|---|---|

| Local DR | CDB26_COPENHAGEN2 | Enabled recovery |

| Local reporting | CDB26_COPENHAGENREP | Deferred recovery |

| Remote DR | CDB26_AARHUS | Enabled recovery |

| Remote reporting | CDB26_AARHUSREP | Deferred recovery |

You would set the following in your AutoUpgrade config file:

upg1.manage_standbys_clause=standbys=CDB26_COPENHAGEN2,CDB26_AARHUS

You would need to merge the two procedures together. This is left as a reader’s exercise.

What If My Database Is A RAC Database?

There are no changes to the procedure if you have an Oracle RAC database. AutoUpgrade detects this and sets CLUSTER_DATABASE=FALSE at the appropriate time. It also removes the non-CDB from the Grid Infrastructure configuration.

Pre-plugin Backups

After converting a non-CDB to PDB, you can restore a PDB using a combination of backups from before and after the plug-in operation. Backups from before the plug-in is called pre-plugin backups.

A restore using pre-plugin backups is more complicated; however, AutoUpgrade eases that by exporting the RMAN backup metadata (config file parameter export_rman_backup_for_noncdb_to_pdb).

I suggest that you:

- Start a backup immediately after the upgrade, so you don’t have to use pre-plugin backups.

- Practice restoring with pre-plugin backups.

What If My Database Is Encrypted

AutoUpgrade fully supports upgrading an encrypted database. I can still use the above procedure with a few changes.

You’ll need to input the non-CDB database keystore password into the AutoUpgrade keystore. You can find the details in a previous blog post.

In the container database, AutoUpgrade always adds the database encryption keys to the unified keystore. After the conversion, you can switch to an isolated keystore.

Other Config File Parameters

The config file shown above is a basic one. Let me address some of the additional parameters you can use.

-

timezone_upg: AutoUpgrade upgrades the database time zone file after the actual upgrade. This requires an additional restart of the database and might take significant time if you have lots ofTIMESTAMP WITH TIME ZONEdata. If so, you can postpone the time zone file upgrade or perform it in a more time-efficient manner. -

before_action/after_action: Extend AutoUpgrade with your own functionality by using scripts before or after the job.