Get ready for the future and enjoy the many new cool features in Oracle Database 23ai. It is just an upgrade away.

This blog post gives you a quick overview of the upgrade to Oracle Database 23ai. Plus, it is the starting point of a whole series of blog posts with all the details you must know.

Things to Know

- You can upgrade to Oracle Database 23ai if your database runs 19c or 21c. If you have an older database, you must first upgrade to 19c and then upgrade again to 23ai.

- Oracle Database 23ai supports the multitenant architecture only. If your database is a non-CDB, you must also convert it to a pluggable database (PDB) as part of the upgrade.

- Although the Multitenant Option requires a separate license, you can still run databases on the multitenant architecture without it. Starting with Oracle Database 19c, Oracle allows up to three user-created pluggable databases per container database without the Multitenant Option. Check the license guide for details.

- Oracle Database 23ai is the next long-term support release, which means you can stay current with patches for many years. At the time of writing, patching ends in April 2032, but check Release Schedule of Current Database Releases (Doc ID 742060.1) for up-to-date information.

- Since Oracle Database 23ai is a long-term support release, I recommend upgrading your production databases to this release. The innovation releases have a much shorter support period, so you would face the next upgrade sooner.

- In Oracle Database 23ai, AutoUpgrade is the only recommended tool for upgrading your database. Oracle has desupported the Database Upgrade Assistant (DBUA).

- You can also use Data Pump or Transportable Tablespaces to migrate your data directly into Oracle Database 23ai, even if the source database runs an older release and is a non-CDB. In fact, you can export from Oracle v5 and import directly into a 23ai PDB.

- Check the Oracle Database Upgrade Quick Start Guide for a short introduction to database upgrades.
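The upgrade rules above boil down to a small decision: how many upgrade hops you need, and whether the upgrade also includes a PDB conversion. The following is a minimal sketch of that logic, not an Oracle tool. The helper name `plan_upgrade` and the release strings such as "12.2" are hypothetical, and the sketch only encodes the rules stated in this post (direct from 19c or 21c, otherwise via 19c; non-CDBs become PDBs).

```python
from typing import List

# Per this post: 19c and 21c databases can upgrade directly to 23ai.
DIRECT_SOURCES = {"19c", "21c"}


def plan_upgrade(source_release: str, is_cdb: bool) -> List[str]:
    """Return the ordered steps to reach 23ai (hypothetical helper)."""
    if source_release == "23ai":
        return []  # already on the target release
    steps: List[str] = []
    if source_release not in DIRECT_SOURCES:
        # Older releases must reach 19c first. Very old releases may need
        # an extra hop to get to 19c; check the 19c upgrade documentation.
        steps.append("upgrade to 19c")
    if is_cdb:
        steps.append("upgrade CDB to 23ai")
    else:
        # 23ai supports only the multitenant architecture, so a non-CDB
        # is converted to a PDB as part of the upgrade.
        steps.append("upgrade to 23ai and convert to PDB")
    return steps


if __name__ == "__main__":
    print(plan_upgrade("12.2", is_cdb=False))
    print(plan_upgrade("21c", is_cdb=True))
```

Note that an older non-CDB stays a non-CDB after the hop to 19c; the conversion happens only on the final step into 23ai.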

Important Things about Multitenant Migration

- The multitenant conversion is irreversible. Not even Flashback Database can help if you want to roll back. You must consider this when planning for a potential rollback.

- For smaller databases, you can rely on RMAN backups. However, for larger databases you might not be able to restore without the required time frame.

- For rollback, you can use a copy of the data files:

- The

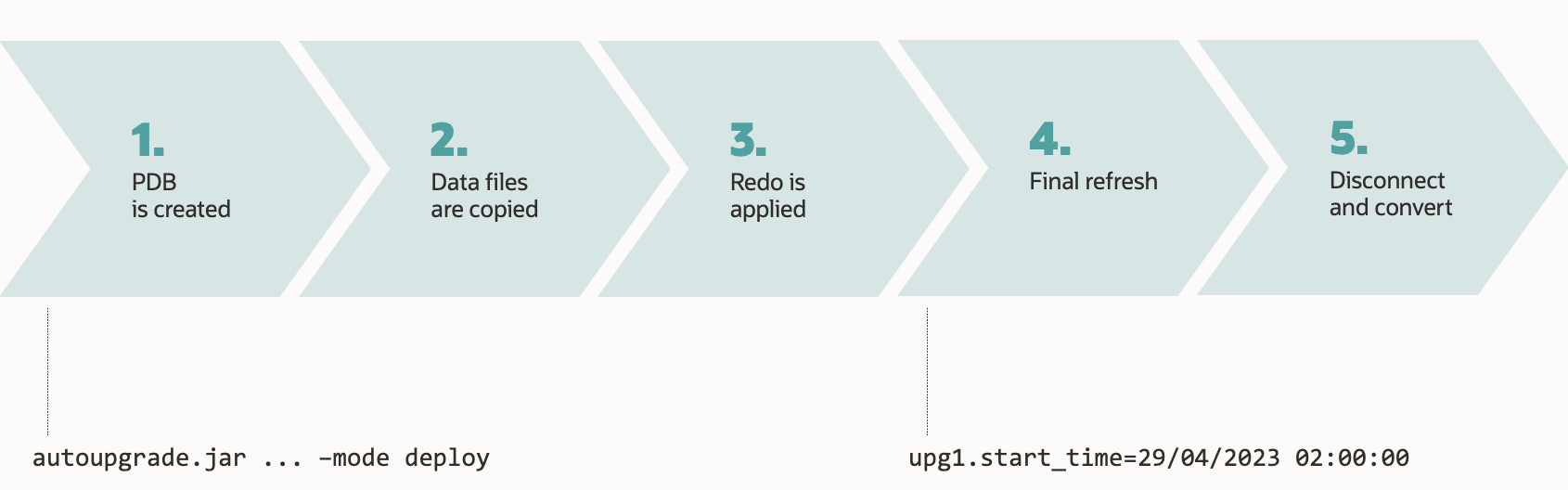

CREATE PLUGGABLE DATABASEstatement has aCOPYclause which copies the data files and uses the copies for the new PDB. - Refreshable clone PDBs can minimize the time needed to copy the data files by doing it advance and rolling forward with redo.

- Use image copies of your data files and roll forward with RMAN.

- Use a standby database for rollback.

- Storage snapshots

- The

- Depending on your Data Guard configuration, the plug-in operation needs special attention on your standby databases. If you have standby databases, be very thorough and test the procedure properly.

- In the worst case, you can break your standby databases without knowing it. Be sure to check your standby databases at the end of the migration. I recommend performing a switchover to be sure.

- The multitenant conversion requires additional downtime. Normally, I’d say around 10-20 minutes of additional downtime. But if you have Data Guard and must fix your standby databases within the maintenance window, then you need even more time.
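Since AutoUpgrade is the recommended tool and also performs the non-CDB to PDB conversion, a config file ties these points together. The following sketch generates such a config as plain text. The parameter names `global.autoupg_log_dir`, `source_home`, `target_home`, `sid` and `target_cdb` come from the AutoUpgrade documentation; `target_pdb_copy_option` is my assumption of how to request the COPY clause with a `file_name_convert` mapping, so verify it against the documentation of your AutoUpgrade version. The function name, SIDs and paths are hypothetical placeholders.

```python
from typing import Optional, Tuple


def autoupgrade_config(
    prefix: str,
    sid: str,
    source_home: str,
    target_home: str,
    target_cdb: str,
    log_dir: str,
    copy_convert: Optional[Tuple[str, str]] = None,
) -> str:
    """Build an AutoUpgrade config that upgrades a non-CDB and plugs it into a CDB."""
    lines = [
        f"global.autoupg_log_dir={log_dir}",
        f"{prefix}.source_home={source_home}",
        f"{prefix}.target_home={target_home}",
        f"{prefix}.sid={sid}",
        # The existing 23ai CDB that the upgraded non-CDB is plugged into.
        f"{prefix}.target_cdb={target_cdb}",
    ]
    if copy_convert is not None:
        # Assumption: verify this parameter in your AutoUpgrade version's docs.
        # It asks for a copy of the data files, keeping the originals for rollback.
        old, new = copy_convert
        lines.append(
            f"{prefix}.target_pdb_copy_option=file_name_convert=('{old}', '{new}')"
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All names and paths below are hypothetical placeholders.
    print(
        autoupgrade_config(
            prefix="upg1",
            sid="DB19",
            source_home="/u01/app/oracle/product/19",
            target_home="/u01/app/oracle/product/23",
            target_cdb="CDB23",
            log_dir="/u01/app/oracle/autoupgrade",
            copy_convert=("/u02/oradata/DB19/", "/u02/oradata/CDB23/DB19/"),
        ),
        end="",
    )
```

You would save the output, for example as DB19.cfg, and run `java -jar autoupgrade.jar -config DB19.cfg -mode analyze` before the actual `-mode deploy`. Copying the data files keeps the original non-CDB intact for rollback, at the cost of extra storage and a longer plug-in step.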

How to

Over the coming weeks, I will publish several blog posts with step-by-step instructions.

Non-CDB

- Upgrade Oracle Database 19c Non-CDB to 23ai and Convert to PDB

- Upgrade Oracle Database 19c Non-CDB to 23ai and Convert to PDB Using Refreshable Clone PDBs

- Upgrade Oracle Database 19c Non-CDB to 23ai and Convert to PDB with Data Guard and Re-using Data Files (Enabled Recovery)

- Upgrade Oracle Database 19c Non-CDB to 23ai and Convert to PDB with Data Guard and Restoring Data Files (Deferred Recovery)

CDB

- Upgrade Oracle Database 19c/21c CDB to 23ai

- Upgrade Oracle Database 19c/21c CDB to 23ai with Data Guard

PDB

- Upgrade Oracle Database 19c/21c PDB to 23ai

- Upgrade Oracle Database 19c/21c PDB to 23ai with Data Guard using Refreshable Clone PDBs

- Upgrade Oracle Database 19c/21c PDB to 23ai with Data Guard and Re-using Data Files (Enabled Recovery)

- Upgrade Oracle Database 19c/21c PDB to 23ai with Data Guard and Restoring Data Files (Deferred Recovery)

OCI

- Upgrade Oracle Base Database Service to Oracle Database 23ai