I always fear the worst when I get a TNS error. It’s not my area of expertise. A TNS error was exactly what I got while configuring a Data Guard environment. Redo transport didn’t work; the redo logs never made it to the standby database.

The Error

I took a look in the alert log on the primary database and found this error:

2022-05-10T08:25:28.739917+00:00

"alert_SALES2.log" 5136L, 255034C

TCP/IP NT Protocol Adapter for Linux: Version 12.2.0.1.0 - Production

Time: 10-MAY-2022 18:09:02

Tracing not turned on.

Tns error struct:

ns main err code: 12650

TNS-12650: No common encryption or data integrity algorithm

ns secondary err code: 0

nt main err code: 0

nt secondary err code: 0

nt OS err code: 0

A little further in the alert log, I found proof that the primary database could not connect to the standby database:

2022-05-10T18:09:02.991061+00:00

Error 12650 received logging on to the standby

TT04: Attempting destination LOG_ARCHIVE_DEST_2 network reconnect (12650)

TT04: Destination LOG_ARCHIVE_DEST_2 network reconnect abandoned

2022-05-10T18:09:02.991482+00:00

Errors in file /u01/app/oracle/diag/rdbms/sales2_fra3cx/SALES2/trace/SALES2_tt04_75629.trc:

ORA-12650: No common encryption or data integrity algorithm

Error 12650 for archive log file 1 to '...'

The Investigation

As always, Google it! (I have used DuckDuckGo instead of Google for many years for privacy reasons, yet I still say "google it", which is fairly annoying.)

The search revealed this MOS note: ORA-12650: No Common Encryption Or Data Integrity Algorithm When Using SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=sha256 (Doc ID 2396891.1). Although it is fairly old, it led me to look for issues with the data integrity checks defined in sqlnet.ora.

The primary database had the following defined in sqlnet.ora:

SQLNET.CRYPTO_CHECKSUM_SERVER=REQUIRED

SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA1)

SQLNET.CRYPTO_CHECKSUM_CLIENT=REQUIRED

SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA1)

The above means that any connection made to or from this database must use data integrity checks; that is what CRYPTO_CHECKSUM_SERVER and CRYPTO_CHECKSUM_CLIENT define. Also, the database only accepts the SHA1 algorithm, whether it acts as server (CRYPTO_CHECKSUM_TYPES_SERVER) or as client (CRYPTO_CHECKSUM_TYPES_CLIENT).

Then I looked in sqlnet.ora on the standby database:

SQLNET.CRYPTO_CHECKSUM_CLIENT=ACCEPTED

SQLNET.CRYPTO_CHECKSUM_SERVER=ACCEPTED

SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA256,SHA384,SHA512,SHA1)

SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA256,SHA384,SHA512)

This database does not require data integrity checks. But if the other party requests or requires them, the database is fine with that. That’s the meaning of ACCEPTED. But look at the allowed algorithms. When acting as server (i.e. receiving connections from someone else), it does not allow the SHA1 algorithm, which is the only one the counterpart allows. The primary database requires a checksum but offers only SHA1, the standby database accepts only SHA-2 algorithms, and so the two have no algorithm in common.
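The mismatch can be modelled in a few lines of Python. This is a minimal sketch of the negotiation rules as I understand them from the Security Guide, not Oracle's actual implementation. The names negotiate_checksum and NegotiationError are made up for illustration, and the rule that the server picks the first algorithm in its own list that the client also offers is an assumption taken from my reading of the documentation.

```python
from typing import Optional, Sequence

LEVELS = {"REJECTED", "ACCEPTED", "REQUESTED", "REQUIRED"}


class NegotiationError(Exception):
    """Hypothetical error type, modelled on ORA-12650 / ORA-12660."""


def negotiate_checksum(
    client_level: str,
    client_types: Sequence[str],
    server_level: str,
    server_types: Sequence[str],
) -> Optional[str]:
    """Return the agreed algorithm, or None if checksumming stays off."""
    for level in (client_level, server_level):
        if level not in LEVELS:
            raise ValueError(f"unknown level: {level}")

    levels = {client_level, server_level}

    # One side refuses while the other insists: the connection fails.
    if "REJECTED" in levels and "REQUIRED" in levels:
        raise NegotiationError(
            "ORA-12660: Encryption or crypto-checksumming parameters incompatible"
        )

    # Checksumming is only switched on if at least one side asks for it
    # and neither side rejects it.
    wanted = bool(levels & {"REQUESTED", "REQUIRED"})
    if "REJECTED" in levels or not wanted:
        return None

    # Assumption: the server walks its own list in order and takes the
    # first algorithm that the client also offers.
    for algorithm in server_types:
        if algorithm in client_types:
            return algorithm

    # No overlap is only fatal when one side insists on a checksum.
    if "REQUIRED" in levels:
        raise NegotiationError(
            "ORA-12650: No common encryption or data integrity algorithm"
        )
    return None


if __name__ == "__main__":
    # Primary (client side of redo transport) against standby (server side).
    try:
        negotiate_checksum(
            "REQUIRED", ["SHA1"],
            "ACCEPTED", ["SHA256", "SHA384", "SHA512"],
        )
    except NegotiationError as exc:
        print(exc)
```

With the values from the two sqlnet.ora files above, the primary is the client (REQUIRED, SHA1 only) and the standby is the server (ACCEPTED, SHA-2 only), so the sketch ends in the ORA-12650 branch, just like the alert log.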

The Solution

I decided to remove all instances of SHA1 because:

- It is an old algorithm

- Any 12c database or client supports newer algorithms

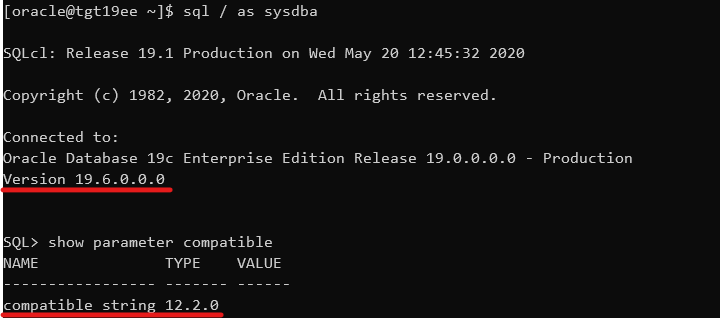

- In this environment, I don’t have any old 11g servers or clients

I added all the SHA-2 algorithms as supported algorithms. Now, sqlnet.ora in both databases looks like this:

SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA256,SHA384,SHA512)

SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA256,SHA384,SHA512)

This solved the problem, and redo transport now works fine.
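In a Data Guard environment the roles can switch, so both directions need a common algorithm. Here is a small Python sketch for comparing two sqlnet.ora files before touching them. The function names are hypothetical, and the parser is deliberately simple: it assumes each parameter sits on a single line with its values in parentheses, which is true for the files shown here but not for every valid sqlnet.ora.

```python
import re
from typing import Dict, List

# Matches e.g. SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA256,SHA384)
# Assumption: one parameter per line, values wrapped in parentheses.
_TYPES = re.compile(
    r"^\s*SQLNET\.CRYPTO_CHECKSUM_TYPES_(CLIENT|SERVER)\s*=\s*\(([^)]*)\)",
    re.IGNORECASE | re.MULTILINE,
)


def parse_checksum_types(sqlnet_text: str) -> Dict[str, List[str]]:
    """Extract the client and server checksum algorithm lists."""
    result: Dict[str, List[str]] = {"CLIENT": [], "SERVER": []}
    for role, body in _TYPES.findall(sqlnet_text):
        result[role.upper()] = [
            name.strip().upper() for name in body.split(",") if name.strip()
        ]
    return result


def common_algorithms(client_sqlnet: str, server_sqlnet: str) -> List[str]:
    """Algorithms the connecting side offers that the receiving side allows."""
    client = parse_checksum_types(client_sqlnet)["CLIENT"]
    server = parse_checksum_types(server_sqlnet)["SERVER"]
    return [name for name in server if name in client]


# The original files from the investigation above.
PRIMARY = """
SQLNET.CRYPTO_CHECKSUM_SERVER=REQUIRED
SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA1)
SQLNET.CRYPTO_CHECKSUM_CLIENT=REQUIRED
SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA1)
"""

STANDBY = """
SQLNET.CRYPTO_CHECKSUM_CLIENT=ACCEPTED
SQLNET.CRYPTO_CHECKSUM_SERVER=ACCEPTED
SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA256,SHA384,SHA512,SHA1)
SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA256,SHA384,SHA512)
"""

if __name__ == "__main__":
    print("primary -> standby:", common_algorithms(PRIMARY, STANDBY))
    print("standby -> primary:", common_algorithms(STANDBY, PRIMARY))
```

Run against the original files, the primary-to-standby direction comes back empty, which matches the failing redo transport. The opposite direction still has SHA1 in common, so the problem would only have shown up in one direction.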

If I wanted to go for maximum security, I could allow only the SHA512 algorithm in both sqlnet.ora files:

SQLNET.CRYPTO_CHECKSUM_TYPES_CLIENT=(SHA512)

SQLNET.CRYPTO_CHECKSUM_TYPES_SERVER=(SHA512)

And force both databases to always use data integrity checks:

SQLNET.CRYPTO_CHECKSUM_SERVER=REQUIRED

SQLNET.CRYPTO_CHECKSUM_CLIENT=REQUIRED

Security

Some questions I asked myself while reading the Security Guide 19c.

Why do I want data integrity checks in my connections?

To protect against two types of attack:

- Data modification attack: An unauthorized party intercepting data in transit, altering it, and retransmitting it is a data modification attack. For example, intercepting a $100 bank deposit, changing the amount to $10,000, and retransmitting the higher amount is a data modification attack.

- Replay attack: Repetitively retransmitting an entire set of valid data is a replay attack, such as intercepting a $100 bank withdrawal and retransmitting it ten times, thereby receiving $1,000.
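To get a feel for how a checksum can stop both attacks, here is a minimal Python sketch of the general idea: a keyed hash over a sequence number plus the payload. It is an illustration only and not how Oracle Net implements its integrity checks. The key, the message layout, and the names sign and Receiver are all assumptions made for the example.

```python
import hashlib
import hmac


def sign(key: bytes, sequence: int, payload: bytes) -> bytes:
    """Keyed SHA-256 checksum over the sequence number and the payload."""
    message = sequence.to_bytes(8, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


class Receiver:
    """Accepts each sequence number once, and only with a valid checksum."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.expected_sequence = 0

    def accept(self, sequence: int, payload: bytes, tag: bytes) -> bool:
        # A repeated or out-of-order sequence number looks like a replay.
        if sequence != self.expected_sequence:
            return False
        # A changed payload no longer matches the keyed checksum.
        if not hmac.compare_digest(sign(self.key, sequence, payload), tag):
            return False
        self.expected_sequence += 1
        return True


if __name__ == "__main__":
    key = b"shared-secret"  # hypothetical key known to both sides
    receiver = Receiver(key)
    deposit = b"deposit 100"
    tag = sign(key, 0, deposit)

    print(receiver.accept(0, b"deposit 10000", tag))  # modified in transit
    print(receiver.accept(0, deposit, tag))           # the genuine message
    print(receiver.accept(0, deposit, tag))           # the same message again
```

The modified deposit is rejected because the checksum no longer matches, the genuine one is accepted, and the repeated one is rejected because its sequence number has already been used.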

Can I do more to strengthen security in sqlnet.ora?

Yes. You should definitely also consider network encryption to protect data in transit. Take a look at Configuring Oracle Database Native Network Encryption and Data Integrity in the Security Guide 19c. These four parameters are of interest:

- SQLNET.ENCRYPTION_CLIENT

- SQLNET.ENCRYPTION_SERVER

- SQLNET.ENCRYPTION_TYPES_CLIENT

- SQLNET.ENCRYPTION_TYPES_SERVER

Also, reading Securing the Oracle Database – A technical primer can inspire you.

What’s wrong with SHA-1?

It’s old, and advances in computing power and cryptanalysis have made it insecure. From Wikipedia:

In cryptography, SHA-1 (Secure Hash Algorithm 1) is a cryptographically broken but still widely used hash function which takes an input and produces a 160-bit (20-byte) hash value known as a message digest – typically rendered as a hexadecimal number, 40 digits long. It was designed by the United States National Security Agency, and is a U.S. Federal Information Processing Standard.

Since 2005, SHA-1 has not been considered secure against well-funded opponents; as of 2010 many organizations have recommended its replacement. NIST formally deprecated use of SHA-1 in 2011 and disallowed its use for digital signatures in 2013. As of 2020, chosen-prefix attacks against SHA-1 are practical. As such, it is recommended to remove SHA-1 from products as soon as possible and instead use SHA-2 or SHA-3. Replacing SHA-1 is urgent where it is used for digital signatures.
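For comparison, the digest sizes of SHA-1 and the SHA-2 algorithms from sqlnet.ora can be checked with Python's standard hashlib module. This is just a quick sketch; the helper name digest_bits and the sample message are made up for illustration. Digest length is only part of the story, since the attacks mentioned in the quote exploit weaknesses in the design of SHA-1 itself.

```python
import hashlib


def digest_bits(algorithm: str) -> int:
    """Digest size in bits for a hashlib algorithm name."""
    return hashlib.new(algorithm).digest_size * 8


if __name__ == "__main__":
    message = b"redo transport"  # arbitrary sample input
    for name in ("sha1", "sha256", "sha384", "sha512"):
        hex_digest = hashlib.new(name, message).hexdigest()
        print(f"{name}: {digest_bits(name)} bits, {len(hex_digest)} hex digits")
```

The first line of output shows the 160 bits and 40 hexadecimal digits that the Wikipedia quote mentions for SHA-1.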