As discussed previously, Release Updates rarely include Data Pump fixes. If you start a Data Pump job without these fixes, you may run into errors or severe performance problems.

Hence, I always recommend that you install the Data Pump bundle patch before starting a Data Pump job.

But the fixes are not part of the Release Update, and the bundle patch is not RAC Rolling Installable, so it sounds like a pain to install, right? It is not!

The Data Pump bundle patch is a Non-Binary Online Installable patch, so you can apply it without any downtime.

How To Apply Data Pump Bundle Patch

- I start with a running Oracle Database called FTEX.

ps -ef | grep pmon
oracle 53316 1 0 13:57 ? 00:00:00 ora_pmon_FTEX

- I verify that the Oracle home does not have the Data Pump bundle patch:

$ORACLE_HOME/OPatch/opatch lspatches
37499406;OJVM RELEASE UPDATE: 19.27.0.0.250415 (37499406)
37654975;OCW RELEASE UPDATE 19.27.0.0.0 (37654975)
37642901;Database Release Update : 19.27.0.0.250415 (37642901)

OPatch succeeded.

- I ensure that no Data Pump jobs are currently running.

- I patch my database.

$ORACLE_HOME/OPatch/opatch apply

[output truncated]
Verifying environment and performing prerequisite checks...
OPatch continues with these patches: 37777295

Do you want to proceed? [y|n]
y
User Responded with: Y
All checks passed.
Backing up files...
Applying interim patch '37777295' to OH '/u01/app/oracle/product/19_27'

Patching component oracle.rdbms.dbscripts, 19.0.0.0.0...

Patching component oracle.rdbms, 19.0.0.0.0...
Patch 37777295 successfully applied.
Log file location: /u01/app/oracle/product/19_27/cfgtoollogs/opatch/opatch2025-06-23_14-26-49PM_1.log

OPatch succeeded.

- Although the FTEX database is still running, OPatch doesn’t complain about files in use.

- This is because the Data Pump bundle patch is marked as a non-binary online installable patch.

- I can safely apply the patch to a running Oracle home – as long as no Data Pump jobs are running.

- For cases like this, it’s perfectly fine to use in-place patching.

- I complete patching by running Datapatch:

$ORACLE_HOME/OPatch/datapatch

[output truncated]
Adding patches to installation queue and performing prereq checks...done
Installation queue:
  No interim patches need to be rolled back
  No release update patches need to be installed
  The following interim patches will be applied:
    37777295 (DATAPUMP BUNDLE PATCH 19.27.0.0.0)

Installing patches...
Patch installation complete.  Total patches installed: 1

Validating logfiles...done
Patch 37777295 apply: SUCCESS
  logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/37777295/27238855/37777295_apply_FTEX_2025Jun24_09_10_15.log (no errors)
SQL Patching tool complete on Tue Jun 24 09:12:19 2025
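The checks in the steps above can be scripted. The following is a minimal POSIX shell sketch, not a supported tool. It assumes that ORACLE_HOME and ORACLE_SID point at the database (FTEX here), that OS authentication as SYSDBA works, and that "opatch lspatches" lists the bundle with the same "DATAPUMP BUNDLE PATCH" text Datapatch prints. The function names and the log path under /tmp are hypothetical. The job check treats every state other than NOT RUNNING in DBA_DATAPUMP_JOBS as a running job, and it only sees the container SQL*Plus connects to.

```shell
#!/bin/sh
# Sketch only: wraps the manual checks from the in-place steps above.
# Assumptions: ORACLE_HOME/ORACLE_SID are set for the database, "/ as sysdba"
# works, and opatch lspatches shows the bundle as "DATAPUMP BUNDLE PATCH ...".

DP_PATCH_ID=37777295   # 19.27 Data Pump bundle patch from the example above

# Succeeds if the "opatch lspatches" output on stdin lists a Data Pump bundle patch.
has_dp_bundle() {
  grep -q 'DATAPUMP BUNDLE PATCH'
}

# Succeeds only if stdin holds a count of zero (whitespace ignored).
# Anything else, including SQL*Plus errors, is treated as "jobs may be running".
no_dp_jobs() {
  dp_count=$(tr -d '[:space:]')
  [ "$dp_count" = 0 ]
}

# Succeeds if the Datapatch output on stdin reports a successful apply of patch $1.
datapatch_ok() {
  grep -q "Patch $1 apply: SUCCESS"
}

# Prints the number of Data Pump jobs in any state other than NOT RUNNING
# (only for the container SQL*Plus connects to).
count_dp_jobs() {
  sqlplus -s / as sysdba <<'SQL'
set heading off feedback off pagesize 0
select count(*) from dba_datapump_jobs where state <> 'NOT RUNNING';
exit
SQL
}

# Usage: apply_dp_bundle PATCH_DIR   (PATCH_DIR = the unzipped bundle patch)
apply_dp_bundle() {
  patch_dir=$1
  dp_log=/tmp/datapatch_${DP_PATCH_ID}.log   # hypothetical log location
  if "$ORACLE_HOME/OPatch/opatch" lspatches | has_dp_bundle; then
    echo "A Data Pump bundle patch is already in $ORACLE_HOME" >&2
    return 1
  fi
  if ! count_dp_jobs | no_dp_jobs; then
    echo "Data Pump jobs may be running (or the check failed); not patching" >&2
    return 1
  fi
  # opatch apply uses the current directory as the patch location and
  # still asks "Do you want to proceed?".
  (cd "$patch_dir" && "$ORACLE_HOME/OPatch/opatch" apply) || return 1
  # Write the Datapatch output to a log first, then check it, so Datapatch
  # is never cut off by a reader that exits early.
  "$ORACLE_HOME/OPatch/datapatch" | tee "$dp_log"
  datapatch_ok "$DP_PATCH_ID" < "$dp_log"
}
```

The parsing helpers read from stdin so they can be checked against saved output without a database. The job check deliberately fails closed: if SQL*Plus returns anything other than a plain zero, the sketch refuses to patch.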

That’s it! I can now enjoy the many benefits of the Data Pump bundle patch – without any downtime for a single-instance database.

Happy patching!

Appendix

What About Oracle RAC Database?

Although the Data Pump Bundle Patch is not a RAC Rolling Installable patch, you can still apply it to an Oracle RAC Database following the same approach as above.

Simply apply the patch in turn on all nodes in your cluster. You should use OPatch with the -local option and not OPatchAuto. When all nodes are patched, you can run Datapatch.
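The per-node procedure can be sketched as a dry run that only prints the commands, so you can review them before running anything. The staging directory, node names and instance name (FTEX1) are hypothetical, and the sketch assumes ssh access as the oracle user. The -silent option suppresses the OPatch confirmation prompt; Datapatch is printed once, for the first node, after every node has the patch.

```shell
#!/bin/sh
# Dry run: prints the commands to patch each RAC node in turn, then run Datapatch once.
# Hypothetical values: adjust the Oracle home and staging directory to your cluster.
OH=/u01/app/oracle/product/19_27     # Oracle home from the single-instance example
PATCH_DIR=/u01/stage/37777295        # hypothetical location of the unzipped patch

# Usage: rac_dp_bundle_plan SID_ON_FIRST_NODE NODE...
rac_dp_bundle_plan() {
  sid=$1
  shift
  first_node=$1
  for node in "$@"; do
    # Plain OPatch with -local (not OPatchAuto), one node at a time.
    echo "ssh oracle@$node 'cd $PATCH_DIR && ORACLE_HOME=$OH $OH/OPatch/opatch apply -local -silent'"
  done
  # Only after all nodes are patched: run Datapatch from one node.
  echo "ssh oracle@$first_node 'ORACLE_HOME=$OH ORACLE_SID=$sid $OH/OPatch/datapatch'"
}
```

For example, "rac_dp_bundle_plan FTEX1 node1 node2" prints one opatch line per node followed by a single Datapatch line for node1. Printing instead of executing keeps the "one node at a time" decision with the DBA.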

Is It the Same as an Online or Hot Patch?

No, a Non-Binary Online Installable patch is not the same as an Online or Hot patch. My Oracle Support describes it as follows:

A patch that only affects SQL scripts, PL/SQL, view definitions and XSL style sheets (i.e. non-binary components). This is different than an Online Patch, which can change binary files. Since it does not touch binaries, it can be installed while the database instance is running, provided the component it affects is not in use at the time. Unlike an Online Patch, it does not require later patching with an offline patch at the next maintenance period.

Source: Data Pump Recommended Proactive Patches For 19.10 and Above (Doc ID 2819284.1)

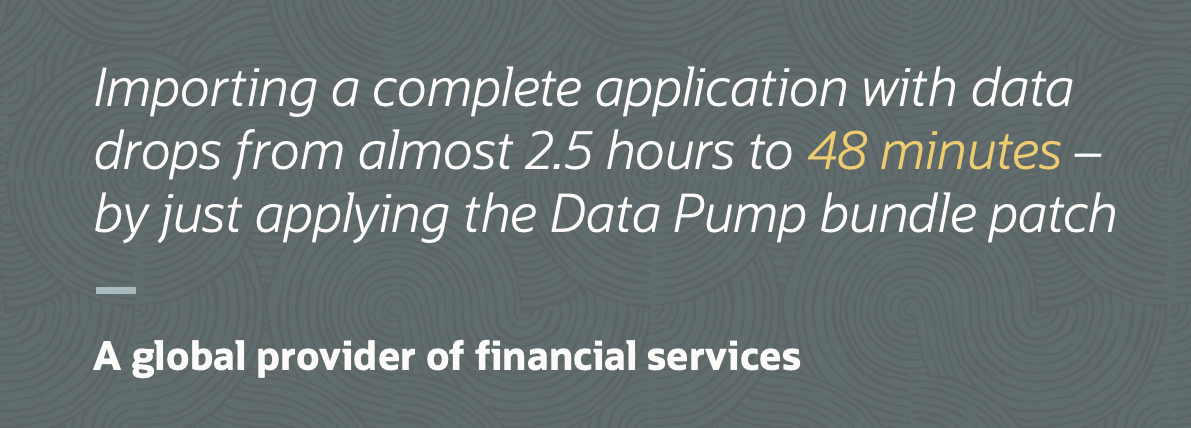

Is the Data Pump Bundle Patch Really That Important?

Yes. There are more than 200 fixes for Data Pump. If you start a Data Pump job without it, you might face errors or severe performance issues.

Here’s a statement from a customer I worked with.

We run our databases on the Windows platform and do in-place updates (although I do know the recommendation is not in-place…). The current Data Pump patch is once again not available for Windows, and as you mentioned 2 releases ago, I was forced to roll back the 19.26 patch. My question: do I need to request that a patch be made available for the Windows environment?

Hi,

We build the Data Pump bundle patch for every Release Update on all platforms, including Windows. You don’t have to request it.

Our aim is to have the bundle patch ready with the release of the RU or as soon as possible thereafter. I see that the Windows 19.28 Release Update is scheduled for release on:

July 30, 2025 (ref. Critical Patch Update (CPU) Program Jul 2025 Patch Availability Document (DB-only) (Doc ID 3086459.1))

It shouldn’t take long after that until the bundle patch is ready.

Regards,

Daniel

Hi

Since the DP bundle is RU-specific, it must be rolled back before applying the next RU, followed by the matching bundle. When running Datapatch after the DP bundle on a CDB with 300 PDBs, it becomes challenging to restrict Data Pump usage due to the lengthy apply time across all PDBs. Additionally, the rollback/apply of the DP bundle significantly increases CPU usage.

Do you have a solution to address this issue?

Thanks

Hi Ned,

You only need to roll back the patch using OPatch if you use in-place patching.

With out-of-place patching, you just prepare the new Oracle home with the bundle patch. When you restart in the new Oracle home, Datapatch only needs to roll off the old bundle patch from the database before applying the new one. Obviously, that takes longer for 300 PDBs than for just one PDB. This is perhaps one of the downsides of the “all-in-one” concept in multitenant. I have a few suggestions:

* You can prioritize PDBs so the most important PDBs are patched first (ALTER DATABASE … PRIORITY).

* You can split Datapatch patching into chunks to have better control over the more important PDBs (./datapatch -pdbs).

* You can switch to unplug-plug patching of individual PDBs. Prepare a new CDB on the new patch level and move PDBs over there. This completes patching of each individual PDB faster, at the expense of doing more operations yourself.
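The chunking suggestion can be sketched as a small shell function that prints one "datapatch -pdbs" command per chunk, with the PDBs listed in the order you want them patched (most important first). It only prints the commands. Patching CDB$ROOT and PDB$SEED in a first run is an assumption of this sketch, and the function name and PDB names are hypothetical.

```shell
#!/bin/sh
# Prints one datapatch command per chunk of PDBs, in the order given.
# Usage: datapatch_chunks CHUNK_SIZE PDB...
datapatch_chunks() {
  size=$1
  shift
  # Assumption: patch the root and seed first, before any application PDB.
  echo "\$ORACLE_HOME/OPatch/datapatch -pdbs 'CDB\$ROOT,PDB\$SEED'"
  chunk=
  count=0
  for pdb in "$@"; do
    # Append the PDB to the current comma-separated chunk.
    chunk=${chunk:+$chunk,}$pdb
    count=$((count + 1))
    if [ "$count" -eq "$size" ]; then
      echo "\$ORACLE_HOME/OPatch/datapatch -pdbs $chunk"
      chunk=
      count=0
    fi
  done
  # Remaining PDBs that did not fill a whole chunk.
  if [ -n "$chunk" ]; then
    echo "\$ORACLE_HOME/OPatch/datapatch -pdbs $chunk"
  fi
}
```

For example, "datapatch_chunks 2 PDB1 PDB2 PDB3" prints a root/seed run, then "-pdbs PDB1,PDB2", then "-pdbs PDB3". Smaller chunks give you earlier checkpoints for the important PDBs, at the cost of more Datapatch invocations.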

Otherwise, we’d need to see the log files from Datapatch to see if there’s something that could be optimized.

Regards,

Daniel

We do use out-of-place patching. I was referring specifically to Datapatch rolling off the old DP bundle and applying the new one. This process is very CPU intensive. Why is it not optimized? Shouldn’t PDBs use objects shared with the CDB?

Hi,

Good to hear that you’re using out-of-place patching.

If you believe there’s something in the process that doesn’t work as intended, you can file an SR. Be sure to include the information mentioned in “SRDC – Data Collection for Datapatch issues (Doc ID 1965956.1)”. You can e-mail me the SR number and I can discuss with our team. My address is daniel.overby.hansen (a) oracle.com.

Regards,

Daniel

Hi.

I’ve encountered a problem related to the Data Pump Bundle Patch.

I updated a two-node RAC from 19.17 to 19.27. I performed this procedure by cloning the home directory:

/u01/app/19.17.0/grid/OPatch/opatchauto apply -phBaseDir /u01/sfw/PATCH/37591516 -prepare-clone -silent /u01/sfw/clone.properties -force_conflict -log /u01/sfw/prepareclone.log

and then applying the patch with switch-clone:

/u01/app/19.17.0/grid/OPatch/opatchauto apply -switch-clone -log /u01/sfw/switchclone.log

However, while the automatic Datapatch run completes successfully, the patches are not applied to the PDBs. Therefore, I have to run Datapatch again:

$ORACLE_HOME/OPatch/datapatch

I’m encountering the same errors mentioned in the note: ORACLE Datapatch Error (ORA-22866) Persists Despite Valid PDB Components and Objects in Dpload.sql (Doc ID 3006623.1)

To avoid this error, the note recommends updating the Data Pump patch to match the database version.

Do you know how I could apply this patch so that when I run switch-clone, it doesn’t throw errors in the datapatch application?

I was wondering if it was possible to add it with an option in the switch-clone command.

Hi,

I’ve not heard of that problem before, but since there is a MOS note, I guess you’re not the only one. However, I see that the MOS note is years old, and 19.17 is a fairly old Release Update. I’m wondering whether you will run into the same problem again when you patch from 19.27.

Unfortunately, I don’t think there’s any option in “opatchauto” that will help you here. If it’s still a problem, I think it needs to be a manual task.

However, I’d be surprised if you do still see this error with a recent version of the Data Pump bundle patch.

Please let me know when you patch the next time.

Daniel
