While doomscrolling on LinkedIn the other day, I came across an interesting post:

What is the best approach to take 65TB database backup before upgrade from 12c to 19c?

This post started a discussion with many good answers and ideas. Let me add my thoughts.

The Ideas

Cold Backup

Stop DB and take a cold backup.

- I don’t recommend this.

- A cold backup means downtime.

- An online backup works just as fine.

Don’t Make Any Preparations

You should be able to restore at any time within the timeframe specified by your business/SLA.

- You should always be prepared!

- If our SLA allows us to use four hours, why not make it faster?

- You could start an additional level 0 or level 1 backup before the maintenance window. That would reduce the number of archive logs, thereby reducing the time to restore.

Partial Offline Backup

Set app tablespace to read-only, then perform a partial offline backup (or a regular backup). In case of a restore, you need to restore less data.

- This is a special backup strategy that you normally don’t use. Be sure to test and practice it.

- A good solution for Standard Edition 2 databases, since you don’t have access to Flashback Database or parallel RMAN backups/restores.

- A viable approach, but not my favorite, since it’s a technique that you rarely use.

Data Guard

Just keep your standby not upgraded.

- This conflicts with the MAA guidelines that suggest keeping the standbys online and applying redo.

- But I like this approach. You need a little more time after the upgrade to go live, because the standby must catch up. But that shouldn’t take long, as an upgrade doesn’t generate that much redo.

- If you have multiple standbys, this technique becomes even more attractive. Have some standbys follow the primary (to go live faster) and a few lagging behind (in case you need to rollback).

Flashback Database

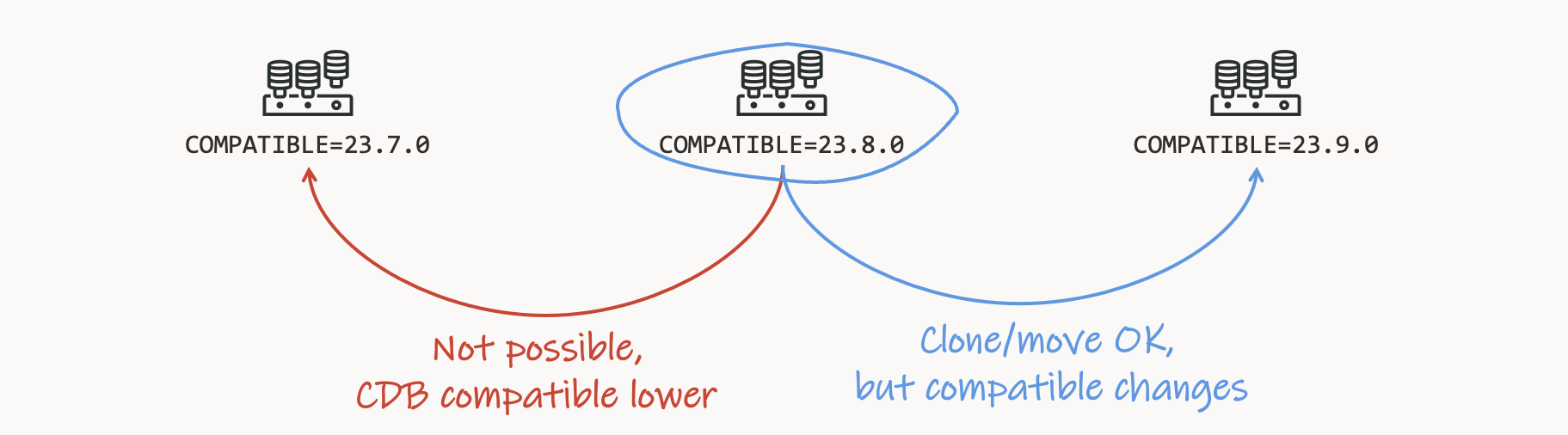

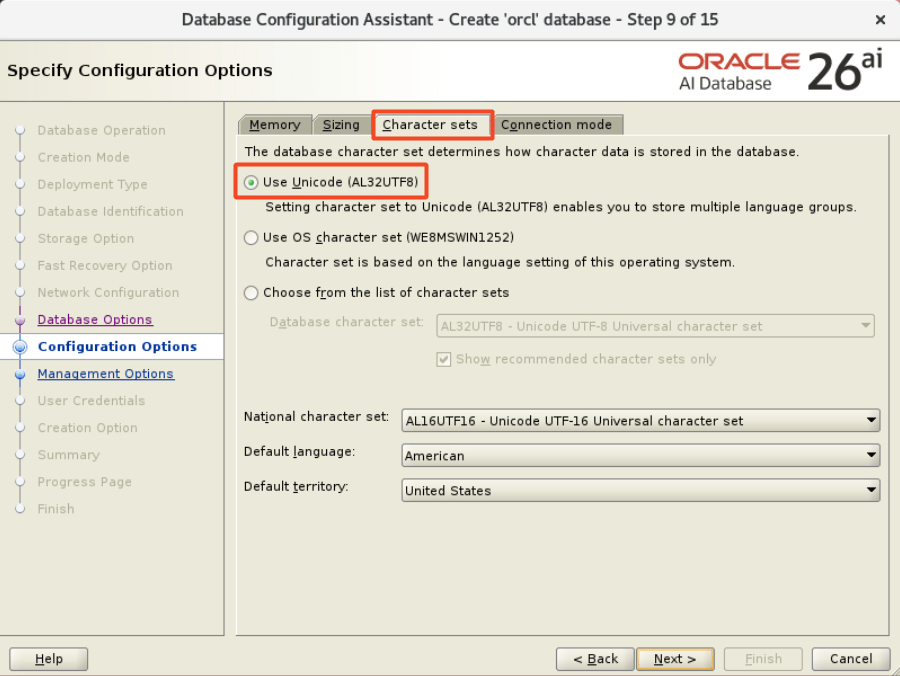

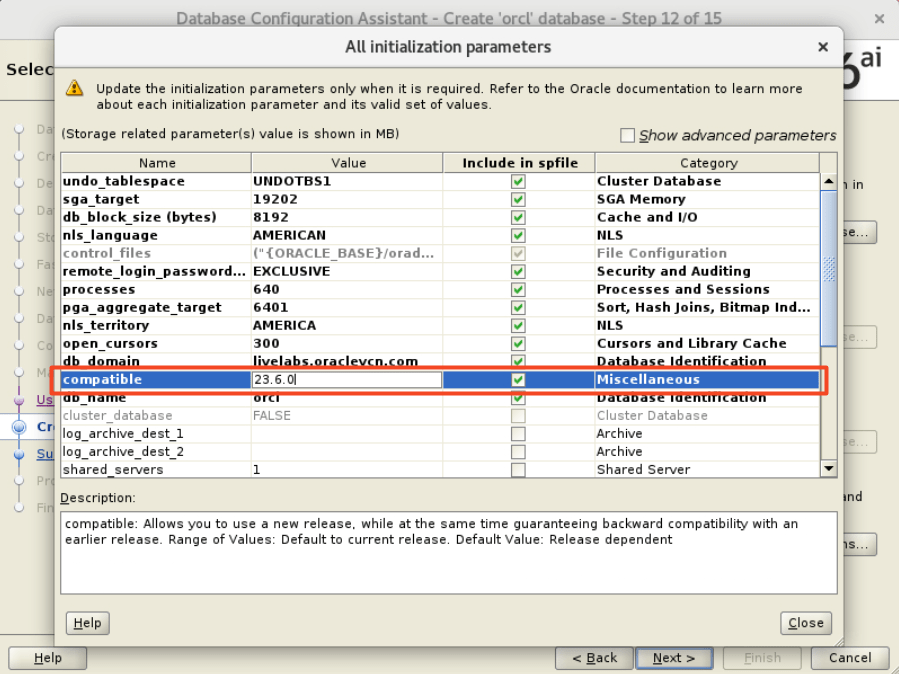

Or keep compatible at 12 and create a guaranteed restore point before the upgrade so you can flashback.

- Yes – my favorite.

- Flashback Database should be your first rollback option. It’s so fast and so convenient.

Another reply:

I just enable Flashback Database, create guaranteed restore point, and start the upgrade. After validation of application, drop the guarantee restore point.

- The answer suggests that Flashback Database was the only rollback option.

- As much as I love Flashback Database, don’t rely solely on it.

- It should be your first option, but not the only one.

- By the way, you don’t have to enable Flashback Database to create a guaranteed restore point. Without Flashback Database turned on, you can still revert back to a restore point.

Downgrade

Oracle Database also has downgrade method.

- Downgrade is a cool option. But it’s a fallback option – something you can use days or weeks later following a successful upgrade.

- If the upgrade crashes midway, you can’t use a downgrade to go back.

Data Pump

Export import is a good option.

- Export/import is not a rollback plan. Similar to downgrade, if the upgrade crashes midway, you won’t be able to use Data Pump.

- Days or weeks later, you can use Data Pump. But it might be a viable option for larger databases.

GoldenGate

Another approach can be use of GoldenGate

- This is an awesome way to upgrade.

- Especially if you go the extra mile and use active/active replication. Then, you can gradually move sessions to the new database and test things slowly.

- But are you willing to pay the price? Not only license fees, but also the added complexity.

What Does Oracle Say?

If you check the Upgrade Guide you will find one recommendation:

Perform a level 0 backup if time allows. Otherwise, at least a level 1 backup.

By doing a level 1 backup close to the maintenance window, then you can limit the amount of archive logs that you would need to apply on top of your level 0/1 backups. This can dramatically reduce the time it takes to restore.

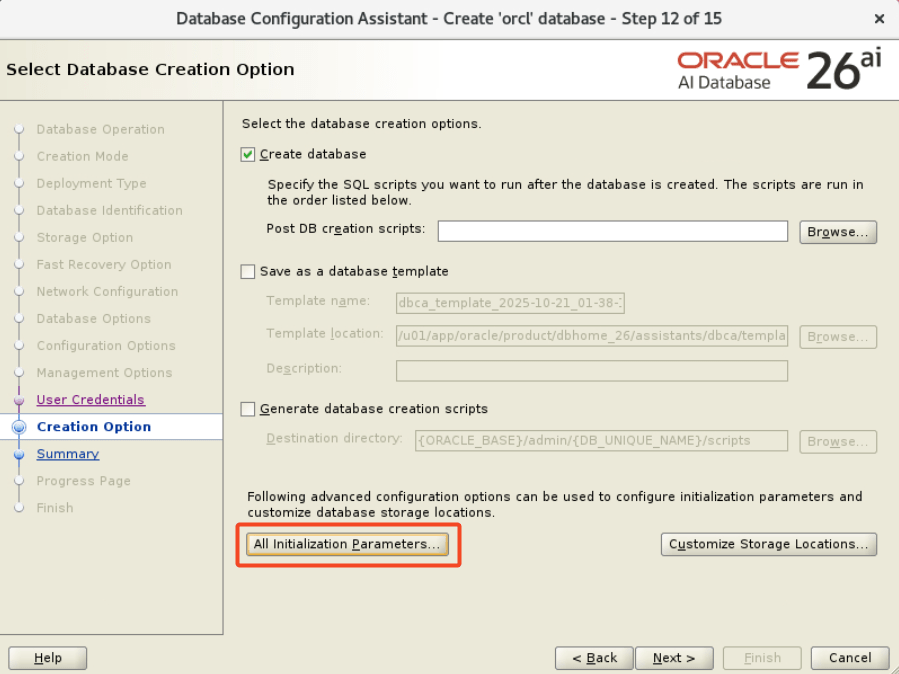

In addition, AutoUpgrade automatically creates a guaranteed restore point prior to the upgrade.

My Final Thoughts

This discussion on LinkedIn is a really good example that there’s no such thing as one-size-fits-all in tech. It always depends…

I recommend finding a solution that:

- You’re comfortable with.

- Fits your business requirements and SLA.

- You know work, and you gain that knowledge by testing it. You never know that it works until you test it.

- Doesn’t stand alone. You need multiple rollback options.

What are your thoughts? Which rollback options do you have? Leave a comment below.

Happy upgrading!