At a recent event, a customer asked me a question.

I’m doing a Data Pump import, and processing PROCACT_SCHEMA takes a lot of time. What’s going on?

I love meeting our customers and hearing about these cases. So, let’s dig into it.

Situation

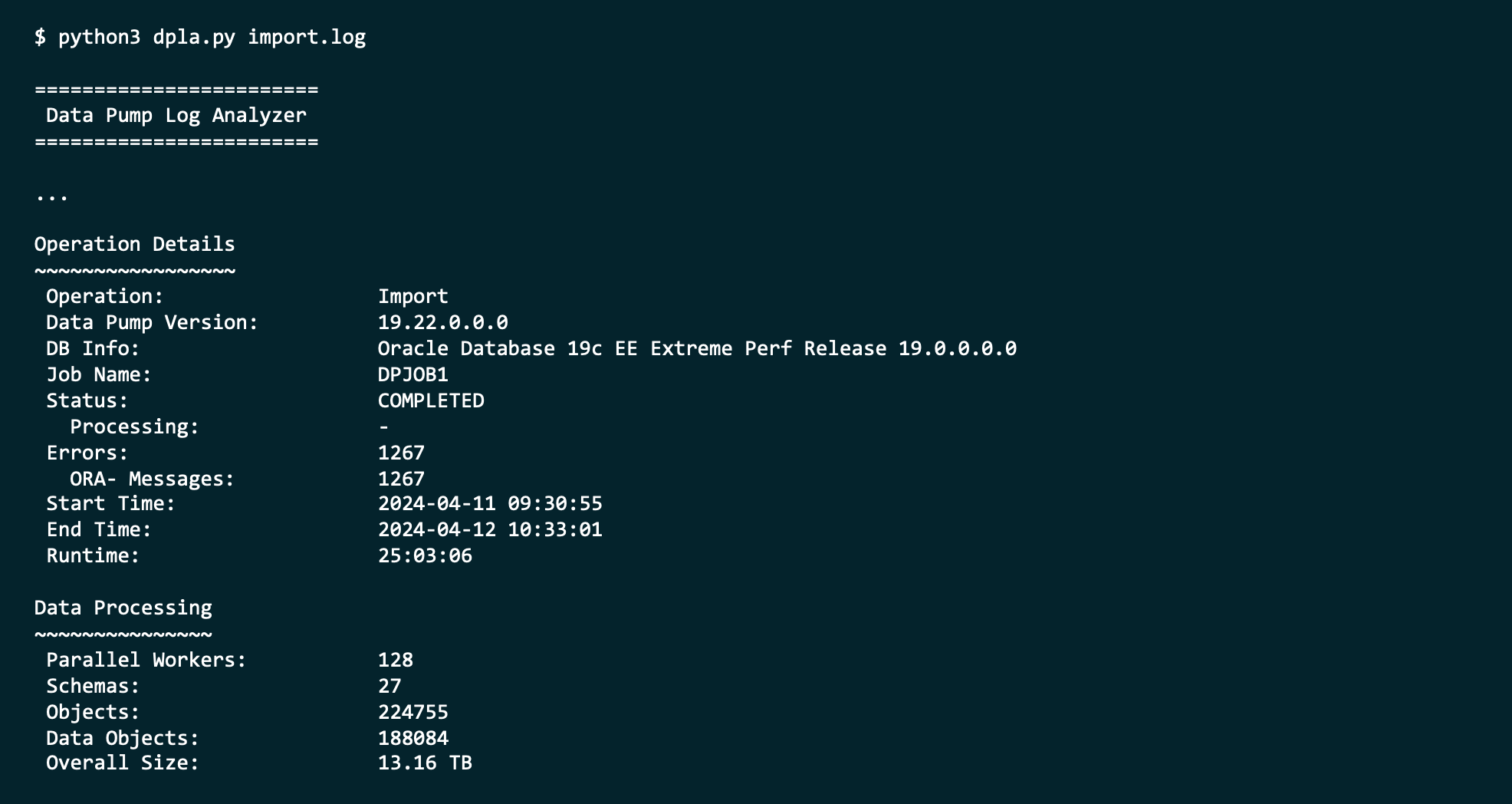

Here is an extract from the Data Pump import log:

08-SEP-24 18:10:18.604: W-4 Processing object type DATABASE_EXPORT/SCHEMA/PROCACT_SCHEMA

08-SEP-24 22:08:24.804: W-6 Completed 78693 PROCACT_SCHEMA objects in 14283 seconds

08-SEP-24 22:08:24.805: W-6 Completed by worker 1 78692 PROCACT_SCHEMA objects in 14283 seconds

08-SEP-24 22:08:24.826: W-6 Processing object type DATABASE_EXPORT/EARLY_POST_INSTANCE_IMPCALLOUT/MARKER

A few observations:

- Seeing a customer using

logtime=allandmetrics=yesin their Data Pump jobs is great. It adds valuable diagnostic information to the log file. - Almost four hours on PROCACT_SCHEMA.

- Almost 80.000 schemas – that’s a lot.

- Only one active worker.

Tracing

The customer sent me a 10046 trace for that period. They had the suspicion that Data Pump was doing something for each schema. I had the same thought, so I sorted the trace file:

sort CDB01_dw00_54345.trc > sort.txt

Using my favorite text editor, I could scroll through the content and find this repeating call:

sys.dbms_logrep_imp.instantiate_schema(schema_name=>SYS_CONTEXT('USERENV','CURRENT_SCHEMA'), export_db_name=>'...', inst_scn=>'...');COMMIT; END;

There’s not much public information about this package, but it is a package used during import to move logical replication settings – used by Oracle Streams and Oracle GoldenGate.

Oracle Streams

I’m not a big Streams expert, but I do know that it is desupported in Oracle Database 19c. But could there be some leftovers in the dictionary that caused Data Pump to move the configuration?

I asked the customer to query many of the DBA_STREAMS_* views in the database. One of them returned a lot of rows:

select count(*) from dba_streams_columns;

COUNT(*)

----------

72636

Solution

The customer asked for advice on using the Data Pump import parameter STREAMS_CONFIGURATION=NO. Judging from the parameter name, it sounds like a good idea, but the documentation reads:

… STREAMS_CONFIGURATION parameter specifies whether to import any GoldenGate Replication metadata that may be present in the export dump file.

But it states GoldenGate metadata – not Streams. But look in the 18c documentation:

… import any Streams metadata that may be present in the export dump file.

Streams and GoldenGate share a lot of the same architecture in the database because they serve the same purpose: Data replication. That’s why the parameter still exists even if Streams is desupported.

The customer didn’t use GoldenGate, so they decided to exclude the replication metadata during import:

impdp ... streams_configuration=no

The PROCACT_SCHEMA part of the import went from almost four hours to just 30 seconds!

Final Words

In this case, a full import, the issue happened during DATABASE_EXPORT/SCHEMA/PROCACT_SCHEMA. It could happen in a schema import as well. In that case, the phase would be SCHEMA_EXPORT/POST_SCHEMA/PROCACT_SCHEMA.

What is PROCACT? It is short for procedural actions. That phase handles things in the database that are not real objects nevertheless something you want to include. Data Pump calls out to other parts of the code to get the job done. That’s also why you typically see one worker active. I’d like to write a separate blog post about that one day.

A full export/import is convenient, but it includes everything, including the things you didn’t know you had in the database – leftovers or garbage data. A schema export/import is a cleaner approach but would still be affected by the above issue. If you’re short on time, consider a schema export/import and include just the objects that you really need. Something like:

impdp ... schemas=abc,def include=table,index,constraint,trigger,package