Let me show you how to upgrade an Oracle Database 19c non-CDB with Data Guard to Oracle AI Database 26ai.

- I reuse data files on the primary database.

- I also reuse the data files on the standby database (enabled recovery).

- This procedure is more complex than deferred recovery. However, the PDB is protected by the standby immediately after plug-in.

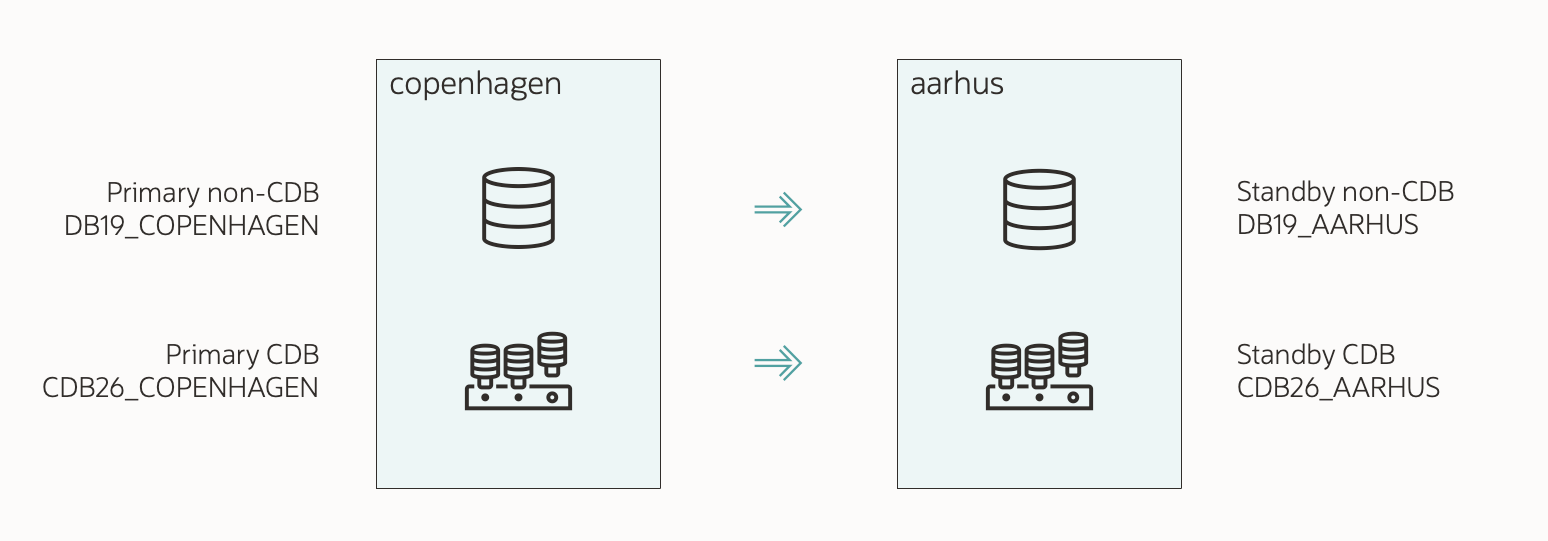

The demo environment:

- Two servers:
  - COPENHAGEN (primary)
  - AARHUS (standby)
- Source non-CDB:
  - SID: DB19
  - Primary unique name: DB19_COPENHAGEN
  - Standby unique name: DB19_AARHUS
  - Oracle home: /u01/app/oracle/product/dbhome_19_27
- Target CDB:
  - SID: CDB26
  - Primary unique name: CDB26_COPENHAGEN
  - Standby unique name: CDB26_AARHUS
  - Oracle home: /u01/app/oracle/product/dbhome_26_1

- Data Guard broker manages the Data Guard configuration.

- Oracle Restart (Grid Infrastructure) manages the databases.

You can still use the procedure if you don’t use Data Guard broker or Grid Infrastructure. Just be sure to change the commands accordingly.

1. Preparations On Primary

I’ve already prepared my database and installed a new Oracle home. I’ve also created a new CDB or decided to use an existing one. The CDB is also configured for Data Guard.

The maintenance window has started, and users have left the database.

- I create an AutoUpgrade config file. I call it upgrade26.cfg:

```
global.global_log_dir=/home/oracle/autoupgrade/upgrade26
upg1.source_home=/u01/app/oracle/product/dbhome_19_27
upg1.target_home=/u01/app/oracle/product/dbhome_26_1
upg1.sid=DB19
upg1.target_cdb=CDB26
upg1.manage_standbys_clause=standbys=all
upg1.export_rman_backup_for_noncdb_to_pdb=yes
```

  - I specify the source and target Oracle homes. These are the Oracle homes of the source non-CDB and target CDB, respectively.
  - `sid` contains the name of my database.
  - `target_cdb` is the name of the CDB where I want to plug in.
  - `manage_standbys_clause=standbys=all` instructs AutoUpgrade to plug the PDB in with enabled recovery.
  - `export_rman_backup_for_noncdb_to_pdb` instructs AutoUpgrade to export RMAN metadata using `DBMS_PDB.EXPORTRMANBACKUP`. This makes it easier to use pre-plugin backups (see appendix).

2. Preparations On Standby

My standby database uses ASM. This complicates the plug-in on the primary because the standby database doesn’t know where to find the data files.

I solve this with ASM aliases. This allows the standby CDB to find the data files from the standby non-CDB and reuse them.

- On the standby, I connect to the non-CDB and get a list of all data files:

```sql
select name from v$datafile;
```

- I also find the GUID of my non-CDB:

```sql
select guid from v$containers;
```

  - The GUID doesn’t change in a Data Guard environment. It’s the same in all databases.

- Then I switch to the ASM instance on the standby host:

```shell
export ORACLE_SID=+ASM1
sqlplus / as sysasm
```

- Here, I create the directories for the OMF location of the future PDB (the non-CDB I’m about to plug in). I insert the GUID found above:

```sql
alter diskgroup data add directory '+DATA/CDB26_AARHUS/<GUID>';
alter diskgroup data add directory '+DATA/CDB26_AARHUS/<GUID>/DATAFILE';
```

  - Notice how the database unique name of the CDB (CDB26_AARHUS) is part of the path.
  - During plug-in, the database keeps its GUID. It never changes.

- For each data file in my source non-CDB (including undo, excluding temp files), I must create an ASM alias, for instance:

```sql
alter diskgroup data add alias '+DATA/CDB26_AARHUS/<GUID>/DATAFILE/users_273_1103046827' for '+DATA/DB19_AARHUS/DATAFILE/users.273.1103046827';
```

  - I create the alias in the OMF location of the future PDB in the standby CDB.
  - The alias must not contain dots (.). That would violate the OMF naming standard. Notice how I replaced them with underscores.
  - The alias points to the data file in the standby non-CDB location.
  - You can find a script to create the aliases in MOS note KB117147.

- I stop redo apply in the standby non-CDB:

```
edit database 'DB19_AARHUS' set state='apply-off';
```

- If my standby is open (Active Data Guard), I restart it in MOUNT mode:

```shell
srvctl stop db -d DB19_AARHUS -o immediate
srvctl start db -d DB19_AARHUS -o mount
```
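With many data files, typing the directory and alias statements by hand is error-prone. Here’s a minimal POSIX shell sketch (not the script from MOS note KB117147) that prints those statements from the data file list, so you can review them before running them in the ASM instance. It assumes the disk group is DATA, as in the demo, and that the data file names were spooled from `select name from v$datafile` without headings (for example, with `set heading off`, `set pagesize 0` and `set feedback off` in SQL*Plus). The function names are hypothetical.

```shell
# Sketch only: print the ASM statements for the standby as text.
# Assumptions: disk group DATA; one data file name per input line, exactly
# as "select name from v$datafile" returns it on the standby non-CDB.

# omf_alias_name PATH: keep the file name part and replace dots with
# underscores, e.g. users.273.1103046827 -> users_273_1103046827
omf_alias_name() {
  basename "$1" | tr '.' '_'
}

# gen_asm_sql GUID CDB_UNIQUE_NAME < datafile_names
gen_asm_sql() {
  guid=$1
  cdb=$2
  target="+DATA/$cdb/$guid/DATAFILE"
  # Directories for the OMF location of the future PDB
  echo "alter diskgroup data add directory '+DATA/$cdb/$guid';"
  echo "alter diskgroup data add directory '$target';"
  # One alias per data file, pointing back to the non-CDB data file
  while IFS= read -r src; do
    [ -z "$src" ] && continue   # ignore blank lines
    echo "alter diskgroup data add alias '$target/$(omf_alias_name "$src")' for '$src';"
  done
}
```

For example, with the GUID in the variable `GUID` and the names in a hypothetical spool file datafiles.txt: `gen_asm_sql "$GUID" CDB26_AARHUS < datafiles.txt > asm_aliases.sql`. Printing SQL instead of executing it keeps a human check in the loop, which matters here because a wrong alias stops recovery on the standby CDB.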

3. Upgrade Primary

- On the primary, I start the upgrade:

```shell
java -jar autoupgrade.jar -mode deploy -config upgrade26.cfg
```

  - AutoUpgrade re-analyzes my non-CDB and executes the pre-upgrade fixups.
  - Next, AutoUpgrade mounts the non-CDB and flushes redo to all standbys.
  - Then, it opens the non-CDB read-only, generates the manifest file, and shuts it down.
  - Finally, AutoUpgrade stops the job and waits for me to complete the manual steps.

- AutoUpgrade prints the following message:

```text
----------------- Continue with the manual steps -----------------
There is a job with manual steps pending.
The checkpoint change number is 2723057 for database DB19.
For the standby database <DB19_AARHUS>, use the checkpoint number <2723057> to recover the database.
You can find the SCN information in: /home/oracle/autoupgrade/upgrade26/DB19/101/drain/scn.json
Once these manual steps are completed, you can resume job 101
-------------------------------------------------------------------
```

  - At this point, all data files on the primary non-CDB are consistent at a specific SCN, 2723057.
  - I must recover the standby data files to the same SCN.

- On the standby host, I connect to the non-CDB and recover it:

```sql
alter database recover managed standby database until change 2723057;
```

  - I set `until change` to the SCN provided by AutoUpgrade.
  - The standby now recovers all data files to exactly the same SCN as the primary. This is a requirement for reusing the data files on plug-in.

- I verify that no data files are at a different SCN:

```sql
select file# from v$datafile_header where checkpoint_change# != 2723057;
```

  - I compare `checkpoint_change#` with the SCN provided by AutoUpgrade.
  - The query should return no rows.

- I shut down the standby non-CDB and remove the database configuration:

```shell
srvctl stop database -d DB19_AARHUS -o immediate
srvctl remove database -d DB19_AARHUS -noprompt
```

  - I remove the database from /etc/oratab as well.
  - From this point, the standby non-CDB must not start again.
  - The standby CDB will use the data files.

- Back on the primary, in the AutoUpgrade console, I instruct AutoUpgrade to continue the plug-in, upgrade, and conversion:

```text
upg> resume -job 101
```

  - AutoUpgrade plugs the non-CDB into the CDB.
  - The plug-in operation propagates via redo to the standby.

- On the standby, I verify that the standby CDB found the data files on plug-in. I find the following in the alert log:

```text
2025-12-18T16:08:28.772125+00:00
Recovery created pluggable database DB19
DB19(3):Recovery scanning directory +DATA/CDB26_AARHUS/<GUID>/DATAFILE for any matching files.
DB19(3):Recovery created file +DATA/CDB26_AARHUS/<GUID>/DATAFILE/system_289_1220179503
DB19(3):Successfully added datafile 12 to media recovery
DB19(3):Datafile #12: '+DATA/CDB26_AARHUS/<GUID>/DATAFILE/system_289_1220179503'
DB19(3):Recovery created file +DATA/CDB26_AARHUS/<GUID>/DATAFILE/sysaux_291_1220179503
DB19(3):Successfully added datafile 13 to media recovery
DB19(3):Datafile #13: '+DATA/CDB26_AARHUS/<GUID>/DATAFILE/sysaux_291_1220179503'
```

  - I can see that the standby CDB scans the OMF location (+DATA/CDB26_AARHUS/<GUID>/DATAFILE) for the data files.
  - However, the data files are in the non-CDB location (+DATA/DB19_AARHUS/DATAFILE).
  - For each data file, the standby finds the alias that I created and uses it to add the data file.

- Back on the primary, I wait for AutoUpgrade to complete the plug-in, upgrade, and PDB conversion. In the end, AutoUpgrade prints the following:

```text
Job 101 completed
------------------- Final Summary --------------------
Number of databases [ 1 ]
Jobs finished [1]
Jobs failed [0]
Jobs restored [0]
Jobs pending [0]
Please check the summary report at:
/home/oracle/autoupgrade/upgrade26/cfgtoollogs/upgrade/auto/status/status.html
/home/oracle/autoupgrade/upgrade26/cfgtoollogs/upgrade/auto/status/status.log
```

  - The non-CDB (DB19) is now a PDB in my CDB (CDB26).
  - AutoUpgrade removes the non-CDB (DB19) registration from /etc/oratab and Grid Infrastructure on the primary. I’ve already done that on the standby.
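If you prefer to script the standby-side steps while AutoUpgrade waits, here’s a minimal POSIX shell sketch. It assumes you saved the AutoUpgrade console message to a text file and reads the SCN from the line "The checkpoint change number is ..."; it doesn’t parse scn.json, because I don’t assume anything about that file’s layout. The helpers print SQL for you to run in SQL*Plus on the standby non-CDB and remove the DB19 line from oratab. All function names are hypothetical.

```shell
# Sketch only: helpers for the standby-side steps while AutoUpgrade waits.
# Assumption: the AutoUpgrade console message was saved to a text file.

# scn_from_message FILE: print the SCN from the line
# "The checkpoint change number is <SCN> for database <SID>."
scn_from_message() {
  sed -n 's/.*The checkpoint change number is \([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# standby_recovery_sql SCN: print the recover statement and the check query
# for the standby non-CDB, refusing anything that isn't a plain number.
standby_recovery_sql() {
  scn=$1
  case $scn in
    ''|*[!0-9]*) echo "invalid SCN: '$scn'" >&2; return 1 ;;
  esac
  echo "alter database recover managed standby database until change $scn;"
  echo "select file# from v\$datafile_header where checkpoint_change# != $scn;"
}

# remove_oratab_entry SID [ORATAB] [BACKUP]: drop the "SID:..." line from
# oratab. The backup goes to $TMPDIR (or /tmp) by default because the oracle
# user usually can't create files in /etc.
remove_oratab_entry() {
  sid=$1
  oratab=${2:-/etc/oratab}
  bak=${3:-${TMPDIR:-/tmp}/oratab.$$.bak}
  cp -p "$oratab" "$bak" || return 1
  # grep -v exits 1 when it selects no lines; treat only exit status 2 as an error
  { grep -v "^$sid:" "$bak" || [ $? -eq 1 ]; } > "$bak.new" || return 1
  # Overwrite in place so oratab keeps its owner and permissions
  cat "$bak.new" > "$oratab" && rm -f "$bak.new" && echo "backup: $bak"
}
```

For example, `standby_recovery_sql "$(scn_from_message autoupgrade_msg.txt)"` prints both statements (autoupgrade_msg.txt is a hypothetical file name). Review them, run them on the standby non-CDB, and only continue when the check query returns no rows. Call `remove_oratab_entry DB19` after `srvctl remove database`.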

4. Check Data Guard

- On the standby CDB, I ensure that the PDB is MOUNTED and recovery status is ENABLED:

```sql
--Should be MOUNTED, ENABLED
select open_mode, recovery_status from v$pdbs where name='DB19';
```

- I also ensure that the recovery process is running:

```sql
--Should be APPLYING_LOG
select process, status, sequence# from v$managed_standby where process like 'MRP%';
```

- Next, I use Data Guard broker (dgmgrl) to validate my configuration:

```
validate database "CDB26_COPENHAGEN"
validate database "CDB26_AARHUS"
```

  - Both databases must report that they are ready for switchover.

- Optionally, but strongly recommended, I perform a switchover:

```
switchover to "CDB26_AARHUS"
```

- The former standby (CDB26_AARHUS) is now the primary. I verify that my PDB opens:

```sql
alter pluggable database DB19 open;
--Must return READ WRITE, ENABLED, NO
select open_mode, recovery_status, restricted from v$pdbs where name='DB19';
```

  - If something went wrong earlier in the process, the PDB won’t open.
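In addition to the queries above, you can cross-check the standby CDB’s alert log from the plug-in step: the number of "Successfully added datafile" lines for the PDB should match the number of data files in the source non-CDB. This is a minimal POSIX shell sketch; it assumes the line format shown in the alert log excerpt earlier (PDB name, container ID in parentheses, then the message), and the function names are hypothetical.

```shell
# Sketch only: check the standby CDB alert log after the plug-in.
# Assumption: lines have the format shown in the alert log excerpt, i.e.
#   DB19(3):Successfully added datafile 12 to media recovery
# where DB19 is the PDB name and 3 is the container ID.

# added_datafiles ALERT_LOG PDB_NAME: print the file numbers the standby
# added to media recovery for the PDB.
added_datafiles() {
  sed -n "s/^$2([0-9]*):Successfully added datafile \([0-9][0-9]*\) to media recovery.*/\1/p" "$1"
}

# check_added_count ALERT_LOG PDB_NAME EXPECTED: EXPECTED is the number of
# data files in the source non-CDB (the list from v$datafile on the standby).
check_added_count() {
  n=$(added_datafiles "$1" "$2" | sort -u | wc -l | tr -d ' ')
  if [ "$n" -eq "$3" ]; then
    echo "OK: $n data files added to media recovery for $2"
  else
    echo "MISMATCH: $n of $3 data files added to media recovery for $2" >&2
    return 1
  fi
}
```

A mismatch doesn’t tell you which file is missing, but `added_datafiles` prints the file numbers, so you can compare them with `v$datafile` on the standby CDB.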

5. Post-upgrade Tasks

- I take care of the post-upgrade tasks.

- I update any profiles or scripts that use the database.

- I clean up and remove the old source non-CDB (DB19). On both primary and standby, I remove:
  - Database files, like PFile, SPFile, password file, control file, and redo logs.
  - Database directories, like adump and the database’s directories in diagnostic_dest and db_recovery_file_dest.
  - Since I reused the data files in my CDB, I don’t delete those.

Final Words

I’m using AutoUpgrade for the entire upgrade; nice and simple. AutoUpgrade helps me recover the non-CDB standby database, so I can reuse the data files on all databases in my Data Guard configuration.

When I reuse the data files, I no longer have the non-CDB for rollback. Be sure to plan accordingly.

Check out the other blog posts about upgrading to Oracle AI Database 26ai.

Happy upgrading!

Appendix

What Happens If I Make a Mistake?

If the standby database fails to find the data files, the recovery process stops, not only for the newly plugged-in PDB but for all PDBs in the CDB.

This is, of course, a critical situation!

- I make sure to perform the operation correctly and double-check afterward (and then check again).

- If I have Active Data Guard and PDB Recovery Isolation turned on, recovery stops only in the newly plugged-in PDB. The CDB continues to recover the other PDBs.

Data Files in ASM or OMF in Regular File System

Primary Database

- When I create the PDB, I’m reusing the data files. The data files are in the OMF location of the non-CDB, e.g.:

```text
+DATA/DB19_COPENHAGEN/DATAFILE
```

- However, the proper OMF location for my PDB is:

```text
+DATA/CDB26_COPENHAGEN/<PDB GUID>/DATAFILE
```

- The CDB doesn’t care about this anomaly. However, if I want to conform to the OMF naming standard, I must move the data files. I find the data files:

```sql
select file#, name from v$datafile;
```

- I use online data file move to move the files that are in the wrong location. The database automatically generates a new OMF name:

```sql
alter database move datafile <file#>;
```

  - Online data file move creates a copy of the data file before switching to the new file and dropping the old one. So, I need additional disk space, and the operation takes time while the database copies the file.
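To avoid picking file numbers by hand, here’s a minimal POSIX shell sketch that prints a move statement for every data file outside the PDB’s OMF location. It assumes the output of `select file#, name from v$datafile`, run inside the PDB, was spooled without headings as "file# name" lines; the function name is hypothetical. The same list works on the standby after `alter session set container=DB19`.

```shell
# Sketch only: generate online move statements for the data files that are
# outside the PDB's OMF location.
# Assumption: input lines are "file# name", spooled from
# "select file#, name from v$datafile" inside the PDB without headings.

# gen_move_sql PDB_OMF_DIR < file_list
gen_move_sql() {
  omf_dir=$1
  while read -r fno fname; do
    [ -z "$fno" ] && continue
    case $fname in
      "$omf_dir"/*) ;;  # already in the PDB's OMF location, nothing to do
      *) echo "alter database move datafile $fno;" ;;
    esac
  done
}
```

For example, `gen_move_sql '+DATA/CDB26_COPENHAGEN/<PDB GUID>/DATAFILE' < files.txt` with the real GUID filled in (files.txt is a hypothetical spool file). The comparison is case-sensitive, so pass the directory exactly as `v$datafile` prints it; otherwise files already in place get copied again for nothing.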

Standby Database

The data files are in the wrong OMF location, like on the primary.

I can use online data file move on the standby database as well. However, I must stop redo apply and open the standby database to do that. If I don’t have a license for Active Data Guard, I must stop redo apply before opening the database.

- Stop redo apply:

```
edit database 'CDB26_AARHUS' set state='apply-off';
```

- Open the standby CDB and PDB:

```sql
alter database open;
alter pluggable database DB19 open;
```

- Find the data files:

```sql
alter session set container=DB19;
select file#, name from v$datafile;
```

- Move those data files that are in the wrong location. This command also removes the original file and the alias:

```sql
alter database move datafile <file#>;
```

- Restart the standby CDB in mount mode. If I have Active Data Guard, I leave the standby database in OPEN mode:

```shell
srvctl stop database -d CDB26_AARHUS
srvctl start database -d CDB26_AARHUS -o mount
```

- Restart redo apply:

```
edit database 'CDB26_AARHUS' set state='apply-on';
```

There are two things to pay attention to:

- I must open the standby database. If redo apply is turned off, I can open the standby database without a license for Active Data Guard. Be sure not to violate your license.

- I must turn off redo apply while I perform the move. Redo transport is still active, so the standby database receives the redo logs. However, apply lag increases while I move the files. In case of a switchover or failover, I need more time to close the apply gap.

Rollback Options

When you convert a non-CDB to a PDB, you can’t use Flashback Database as a rollback method. You need to rely on other methods, like:

- RMAN backups

- Storage snapshots

- Additional standby database (another source non-CDB standby, which you leave behind)

- Refreshable clone PDB

What If I Have Multiple Standby Databases?

You must repeat the relevant steps for each standby database.

You can mix and match standbys with deferred and enabled recovery. Let’s say that you want to use enabled recovery on DR standbys and deferred recovery on the reporting standbys.

| Role | Name | Method |
|---|---|---|
| Local DR | CDB26_COPENHAGEN2 | Enabled recovery |
| Local reporting | CDB26_COPENHAGENREP | Deferred recovery |
| Remote DR | CDB26_AARHUS | Enabled recovery |
| Remote reporting | CDB26_AARHUSREP | Deferred recovery |

You would set the following in your AutoUpgrade config file:

upg1.manage_standbys_clause=standbys=CDB26_COPENHAGEN2,CDB26_AARHUS
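With several standbys, it’s easy to list the wrong ones. This minimal POSIX shell sketch builds the parameter line from a list of standbys, keeping only those with enabled recovery. The input format ("DB_UNIQUE_NAME METHOD", with METHOD either enabled or deferred) and the function name are hypothetical; they simply mirror the table above.

```shell
# Sketch only: build manage_standbys_clause from a list of standbys.
# Assumption: input lines are "DB_UNIQUE_NAME METHOD", where METHOD is
# "enabled" or "deferred" (a hypothetical format mirroring the table).

# standbys_clause PREFIX < standby_list
standbys_clause() {
  prefix=$1
  # Join the names of all standbys with enabled recovery, comma-separated
  names=$(awk '$2 == "enabled" { printf "%s%s", sep, $1; sep = "," }')
  if [ -z "$names" ]; then
    echo "no standby uses enabled recovery" >&2
    return 1
  fi
  echo "$prefix.manage_standbys_clause=standbys=$names"
}
```

Fed with the four standbys from the table, it prints the same line as above: `upg1.manage_standbys_clause=standbys=CDB26_COPENHAGEN2,CDB26_AARHUS`.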

You would need to merge the two procedures together. This is left as a reader’s exercise.

What If My Database Is A RAC Database?

There are no changes to the procedure if you have an Oracle RAC database. AutoUpgrade detects this and sets CLUSTER_DATABASE=FALSE at the appropriate time. It also removes the non-CDB from the Grid Infrastructure configuration.

Pre-plugin Backups

After converting a non-CDB to a PDB, you can restore the PDB using a combination of backups from before and after the plug-in operation. Backups from before the plug-in are called pre-plugin backups.

A restore using pre-plugin backups is more complicated; however, AutoUpgrade eases that by exporting the RMAN backup metadata (config file parameter export_rman_backup_for_noncdb_to_pdb).

I suggest that you:

- Start a backup immediately after the upgrade, so you don’t have to use pre-plugin backups.

- Practice restoring with pre-plugin backups.

Transparent Data Encryption (TDE)

AutoUpgrade fully supports upgrading an encrypted database. I can still use the above procedure with a few changes.

- You’ll need to add the non-CDB keystore password to the AutoUpgrade keystore. You can find the details in a previous blog post.

- In the container database, AutoUpgrade always adds the database encryption keys to the unified keystore. After the conversion, you can switch to an isolated keystore.

- At some point, you must copy the keystore to the standby database and restart it. Check MOS note KB117147 for additional details.

Other Config File Parameters

The config file shown above is a basic one. Let me address some of the additional parameters you can use.

- `timezone_upg`: AutoUpgrade upgrades the database time zone file after the actual upgrade. This requires an additional restart of the database and might take significant time if you have lots of `TIMESTAMP WITH TIME ZONE` data. If so, you can postpone the time zone file upgrade or perform it in a more time-efficient manner.

- `before_action`/`after_action`: Extend AutoUpgrade with your own functionality by using scripts before or after the job.
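If you add these parameters to an existing config file, a small helper can prevent setting the same parameter twice. Here’s a minimal POSIX shell sketch; the function name is hypothetical, and the script path in the usage example below is only a placeholder.

```shell
# Sketch only: append a parameter to an AutoUpgrade config file, unless the
# same prefix.parameter is already set.

# add_cfg_param CFG_FILE PREFIX PARAM VALUE
add_cfg_param() {
  cfg=$1
  key="$2.$3"
  # index() does a literal prefix match, so dots in the key are not regex wildcards
  if awk -v k="$key=" 'index($0, k) == 1 { found = 1 } END { exit !found }' "$cfg"; then
    echo "$key is already set in $cfg" >&2
    return 1
  fi
  printf '%s=%s\n' "$key" "$4" >> "$cfg"
}
```

For example, `add_cfg_param upgrade26.cfg upg1 timezone_upg no` postpones the time zone file upgrade, and `add_cfg_param upgrade26.cfg upg1 after_action /home/oracle/scripts/after_upgrade.sh` points AutoUpgrade to your own script (a hypothetical path).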