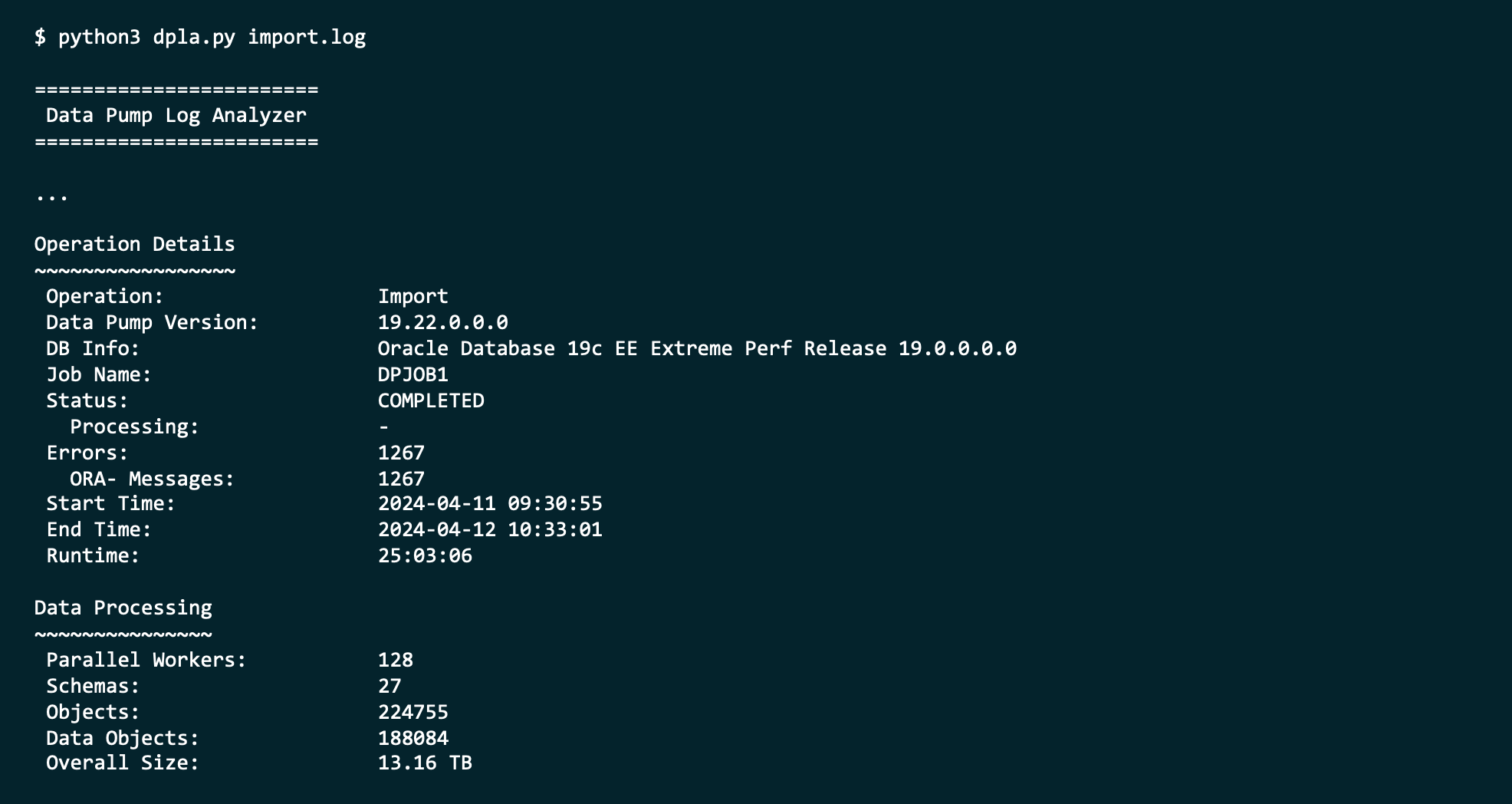

A colleague was helping a customer optimize an import using Data Pump via a database link that involved SecureFile LOBs.

Do you see a way to parallelize the direct import to improve performance and thus shorten the time it takes to import? Or is it not possible for LOB data?

Network mode imports are a flexible way of importing your data when you have limited access to the source system. However, it comes with the price of restrictions. One of them being:

- Network mode import does not use parallel query (PQ) child processes.

In Data Pump, one worker will process a table data object which is either a:

- Table

- Table partition

- Table subpartition

So, for a regular table, this means just one worker is processing the table and it doesn’t use parallel query. That’s bound to be slow for larger data sets, but can you do something?

Starting Point

To illustrate my point, I’ll use a sample data set consisting of:

- One schema (BLOBLOAD)

- With one table (TAB1)

- Containing two columns

- Number (ID)

- BLOB (BLOB_DATA)

- The table has around 16.000 rows

- Size is 50 GB

Doing a regular Data Pump import over a database link is slow because there’s only one worker and no parallel query:

impdp ... \

network_link=srclnk \

schemas=blobload \

parallel=4

...

21-OCT-24 05:30:36.813: Job "SYSTEM"."SYS_IMPORT_SCHEMA_01" successfully completed at Mon Oct 21 05:30:36 2024 elapsed 0 00:11:50

Almost 12 minutes!

Partitioning

Since we know that multiple workers can process different partitions of the same table, let’s try to partition the source table. I’ll use hash partitioning and ensure my partitions are equally distributed:

alter table tab1

modify partition by hash (id)

partitions 32 online;

Repeat the import:

impdp ... \

network_link=srclnk \

schemas=blobload \

parallel=4

...

21-OCT-24 09:08:00.897: Job "SYSTEM"."SYS_IMPORT_SCHEMA_01" successfully completed at Mon Oct 21 09:08:00 2024 elapsed 0 00:04:26

Just 4m 26s – that’s a huge improvement!

In the log, file you’ll see that multiple workers are processing partitions individually. So, even without parallel query, I get parallelism because of multiple workers on the same table – each on different partitions.

But partitioning is a separately licensed option.

Using QUERY Parameter and Multiple Data Pump Imports

I’ve previously blocked about do-it-yourself parallelism for Data Pump exports of BasicFile LOBs. Can I use the same approach here?

The idea is to start multiple Data Pump jobs importing the same table, but each working on a subset of the data.

- First, import just the metadata

impdp ... \ network_link=srclnk \ schemas=blobload \ content=metadata_only - Next, start 4 concurrent imports importing just the rows. Each import works on a subset of the data using thery

queryparameter:impdp ... \ network_link=srclnk \ schemas=blobload \ content=data_only \ query="where mod(id, 4)=0" impdp ... \ network_link=srclnk \ schemas=blobload \ content=data_only \ query="where mod(id, 4)=1" impdp ... \ network_link=srclnk \ schemas=blobload \ content=data_only \ query="where mod(id, 4)=2" impdp ... \ network_link=srclnk \ schemas=blobload \ content=data_only \ query="where mod(id, 4)=3"

No – that’s not possible. During imports, Data Pump acquires a lock on the table being imported using the APPEND hint. This is from a trace of the imports:

INSERT /*+ APPEND NESTED_TABLE_SET_REFS PARALLEL(KUT$,1) */ INTO "BLOBLOAD"."TAB1" KUT$ ("ID", "BLOB_DATA")

SELECT /*+ NESTED_TABLE_GET_REFS PARALLEL(KU$,1) */ "ID", "BLOB_DATA" FROM "BLOBLOAD"."TAB1"@srclnk KU$ WHERE mod(id, 4)=1

If you try to start multiple imports into the same table, you get an error:

ORA-02049: timeout: distributed transaction waiting for lock

So, let’s prevent that by adding data_options=disable_append_hint to each Data Pump import jobs.

Now, multiple Data Pump jobs may work on the same table, but it doesn’t scale lineary.

- One concurrent job: Around 12 minutes

- Four concurrent jobs: Around 8 minutes

- Eight concurrent jobs: Around 7 minutes

It gives a performance benefit, but probably not as much as you’d like.

Two-Step Import

If I can’t import into the same table, how about starting four simultaneous Data Pump jobs using the do-it-yourself approach above, but importing into separate tables and then combining all the tables afterward?

I’ll start by loading 1/4 of the rows (notice the QUERY parameter):

impdp ... \

network_link=srclnk \

schemas=blobload \

query=\(blobload.tab1:\"WHERE mod\(id, 4\)=0\"\)

While that runs, I’ll start three separate Data Pump jobs that each work on a different 1/4 of the data. I’m remapping the table into a new table to avoid the locking issue:

impdp ... \

network_link=srclnk \

schemas=blobload \

include=table \

remap_table=tab1:tab1_2 \

query=\(blobload.tab1:\"WHERE mod\(id, 4\)=1\"\)

In the remaining two jobs, I’ll slightly modify the QUERY and REMAP_TABLE parameters:

impdp ... \

network_link=srclnk \

schemas=blobload \

include=table \

remap_table=tab1:tab1_3 \

query=\(blobload.tab1:\"WHERE mod\(id, 4\)=2\"\)

impdp ... \

network_link=srclnk \

schemas=blobload \

include=table \

remap_table=tab1:tab1_4 \

query=\(blobload.tab1:\"WHERE mod\(id, 4\)=3\"\)

Now, I can load the rows from the three staging tables into the real one:

ALTER SESSION FORCE PARALLEL DML;

INSERT /*+ APPEND PARALLEL(a) */ INTO "BLOBLOAD"."TAB1" a

SELECT /*+ PARALLEL(b) */ * FROM "BLOBLOAD"."TAB1_2" b;

commit;

INSERT /*+ APPEND PARALLEL(a) */ INTO "BLOBLOAD"."TAB1" a

SELECT /*+ PARALLEL(b) */ * FROM "BLOBLOAD"."TAB1_3" b;

commit;

INSERT /*+ APPEND PARALLEL(a) */ INTO "BLOBLOAD"."TAB1" a

SELECT /*+ PARALLEL(b) */ * FROM "BLOBLOAD"."TAB1_4" b;

commit;

This approach took around 7 minutes (3m 30s for the Data Pump jobs, and 3m 30s to load the rows into the real table). Slower than partitioning but still faster than the starting point.

This approach is complicated; the more data you have, the more you need to consider things like transaction size and index maintenance.

Conclusion

Network mode imports have many restrictions, which also affect performance. Partitioning is the easiest and fastest improvement, but it requires the appropriate license option. The final resort is to perform some complicated data juggling.

Alternatively, abandon network mode imports and use dump files. In dump file mode, one worker can use parallel query during export and import, which is also fast.

Thanks

I used an example from oracle-base.com to generate the test data.