Logical migration has many benefits but there is also a catch. It does not transfer some of that nice meta data that make your life easier – such as:

- AWR

- SQL Plan Baselines

- SQL Profiles

Further, we can make a few tweaks to avoid having performance problems after the migration. This post applies to migrations into any database – except Autonomous Databases. In an Autonomous Database you don’t need to worry about such things. The database will fix the issues for you.

AWR

If you are licensed to use AWR in your source database, you can transport the AWR data into your target database.

- In your source database, execute the script

$ORACLE_HOME/rdbms/admin/awrextr.sql. It extracts the AWR data and load them into a Data Pump dump file. - Transfer the dump file to your target database.

- In your target database, import the AWR data using the script

$ORACLE_HOME/rdbms/admin/awrload.sql.

When you look at the old AWR data in your target database, use it with caution. The AWR data was collected on a completely different system (CPU, memory, settings, database release, initialization parameters etc). Your new system is very different. Keep that in mind when you compare data from before and after the migration.

SQL Plan Baselines

If you are using SQL Plan Management to ensure plan stability, you want to transport your baselines as well.

First, in my source database create a staging table. It is used to transport the baselines. Create the staging table in a schema that you will be migrating.

begin

dbms_spm.create_stgtab_baseline (

table_name => 'MIGR_SPB_1',

table_owner => 'SH');

end;

/

Next, I will extract all the baselines including the fixed or accepted plans. It is now stored in the staging table and will be migrated together with my data:

declare

l_count number;

begin

l_count := dbms_spm.pack_stgtab_baseline (

table_name => 'MIGR_SPB_1',

table_owner => 'SH',

enabled => 'YES',

fixed => 'YES');

end;

/

After the migration, in my target database, I can now extract the baselines into the SQL Management Base:

declare

l_count number;

begin

l_count := dbms_spm.unpack_stgtab_baseline (

table_name => 'MIGR_SPB_1',

table_owner => 'SH');

end;

/

That’s it. Now SQL Plan Management will ensure that the same plans are used for the statements that are in the baselines.

If you are not using SQL Plan Management, you should look into it. In my opinion it is one of the most underrated features in the Oracle Database. Many issues are caused by plan changes which is exactly what you can avoid with SQL Plan Management.

SQL Profiles

If you have SQL Profiles you can transport them in a way very similar to transporting SQL Plan Baselines.

First, in the source database, create a staging table in a schema that you will be migrating:

begin

dbms_sqltune.create_stgtab_sqlprof (

table_name => 'MIGR_SQLP_1',

table_owner => 'SH');

end;

/

Next, put the SQL Profiles into the staging table. This example will load all profiles from the DEFAULT category, but there are options to cherry-pick those of interest:

begin

dbms_sqltune.pack_stgtab_sqlprof (

staging_table_name => 'MIGR_SQLP_1',

staging_schema_owner => 'SH');

end;

/

Finally, in my target database, I can unpack the profiles from the staging table and into the data dictionary:

begin

dbms_sqltune.unpack_stgtab_sqlprof (

staging_table_name => 'MIGR_SQLP_1',

staging_schema_owner => 'SH',

replace => TRUE);

end;

/

Statistics

Should you do anything about object statistics?

- If you have migrated into the same release, the answer is no.

- If you have migrated into a higher release, the answer is it depends. It is not required, but if you come from a very old release, you might consider it.

Imagine a migration from 11.2.0.4 to 19c. Significant changes have been made to histograms in that period. If you want to benefit from the new, improved histogram types, you should re-gather statistics. If you don’t do it, the histograms will gradually change over time when the statistics become stale. Some people prefer taking such a change immediately because they don’t like the idea of things happening over the course of time.

If you want to refresh statistics:

exec dbms_stats.delete_database_stats;

exec dbms_stats.gather_database_stats;

To make it a little faster:

exec dbms_stats.set_global_prefs('CONCURRENT','AUTOMATIC');

To make it even faster – requires CPU:

exec dbms_stats.set_global_prefs('DEGREE', DBMS_STATS.AUTO_DEGREE);

To make it as fast as possible – requires a lot of CPU:

exec dbms_stats.set_global_prefs('CONCURRENT','AUTOMATIC');

exec dbms_stats.set_global_prefs('DEGREE', DBMS_STATS.AUTO_DEGREE);

Be sure to set the preferences back to the original value when you are done gathering statistics. Nigel Bayliss has an interesting blog post on How to gather optimizer statistics fast.

Dictionary Statistics

After the migration, the target database has many more tables, indexes, views and so on. This is represented in the data dictionary as additional rows in e.g. OBJ$. All that extra data probably means that the dictionary statistics are stale. Stale dictionary statistics can lead to all sorts of troubles.

Immediately after the migration is completed you should re-gather dictionary statistics to avoid problems caused by stale statistics. Do this right after ZDM has completed the migration:

exec dbms_stats.gather_dictionary_stats;

Or even better:

begin

dbms_stats.gather_schema_stats('SYS');

dbms_stats.gather_schema_stats('SYSTEM');

end;

/

I prefer gathering statistics on SYS and SYSTEM independently. My colleagues and I have seen issues from time to time with dbms_stats.gather_dictionary_stats.

Fixed Object Statistics

After migration and once the system has been properly warmed up, you should re-gather fixed objects statistics. It is important that you don’t gather fixed objects statistics right after migration. You must until you have run a representative workload. If you wait a few days or until the next weekend, then you are most likely on the safe side:

exec dbms_stats.gather_fixed_objects_stats;

This recommendation is similar to the one we give about after upgrade. Read the blog post to read more about fixed objects statistics in general. Also, you can find a very useful piece of code that gathers fixed objects statistics in seven days via a scheduler job.

SQL Tuning Sets

This is something that you should do before you start the migration. Capture your workload from the source database into SQL Tuning Sets.

What is a SQL Tuning Set? It is an object that contains information about a number of SQLs. For each SQL the following is collected:

- SQL Statement – the SQL text itself

- Context – executing schema, binds, and environment (like NLS settings)

- Statistics – execution statistics like elapsed time, CPU time, buffer gets, rows processed

- Plan – last, but not least, the actual execution plan

You can then transport the SQL Tuning Set into the target database. If an SQL is now running slow, you can use the information from the SQL Tuning Set to identify why. For an SQL you can see the old and new plan, compare amount of buffer gets and CPU time and so forth. You can also bring back the old plan, if the new plan is less effective.

First, in the source you create a SQL Tuning Set:

exec dbms_sqlset.create_sqlset (sqlset_name => 'MIGR_STS_1', description => 'For migration - from source');

Next, capture statements from AWR. This will take the top 5000 statements from your entire AWR ordered by elapsed time:

declare

begin_id number;

end_id number;

cur sys_refcursor;

begin

select min(snap_id), max(snap_id) int begin_id, end_id

from dba_hist_snapshot;

open cur for

select value(p) from table(dbms_sqltune.select_workload_repository(

begin_snap => begin_id,

end_snap => end_id,

basic_filter => 'parsing_schema_name not in (''SYS'')',

ranking_measure1 => 'elapsed_time',

result_limit => 5000,

attribute_list => 'ALL')) p;

dbms_sqltune.load_sqlset('MIGR_STS_1', cur);

close cur;

end;

/

You can also sample directly from cursor cache. Start the sampling and then run your workload. This example will sample every minute for 15 minutes:

begin

dbms_sqlset.capture_cursor_cache_sqlset(

sqlset_name => 'MIGR_STS_1',

time_limit => 900,

repeat_interval => 60,

capture_option => 'MERGE',

capture_mode => DBMS_SQLTUNE.MODE_ACCUMULATE_STATS,

basic_filter => 'parsing_schema_name not in (''SYS'')',

sqlset_owner => NULL,

recursive_sql => 'HAS_RECURSIVE_SQL');

end;

/

Finally, put all that information into a staging table, so it can be transported to the target database. Put the staging table into a schema that you plan on migrate. This way you ensure that the SQL Tuning Set is migrated together with your data. In my example it is the schema SH:

begin

dbms_sqltune.create_stgtab_sqlset (

schema => 'SH',

table_name => 'MIGR_STGTAB_1');

dbms_sqltune.pack_stgtab_sqlset (

sqlset_name => 'MIGR_STS_1',

staging_schema_owner => 'SH',

staging_table_name => 'MIGR_STGTAB_1');

end;

/

After the migration, in my target database I can now extract the SQL Tuning Set from the staging table and into the data dictionary:

begin

dbms_sqltune.unpack_stgtab_sqlset (

sqlset_name => '%',

replace => true,

staging_schema_owner => 'SH',

staging_table_name => 'MIGR_STGTAB_1'

);

end;

/

Now – if you get into trouble with a misbehaving SQL – you can use the information in the SQL Tuning Set to investigate the problem.

Conclusion

When you perform a logical migration, there are some additional tasks that you should consider at least for your mission-critical databases. These steps will help you avoid performance issues after the migration.

In addition to the topics discussed here, I recommend that you also watch our webinar Performance Stability, Tips and Tricks and Underscores (slides). It has the complete prescription to avoid performance problems after upgrade and migration.

Other Blog Posts in This Series

- Introduction

- Install And Configure ZDM

- Physical Online Migration

- Physical Online Migration to DBCS

- Physical Online Migration to ExaCS

- Physical Online Migration and Testing

- Physical Online Migration of Very Large Databases

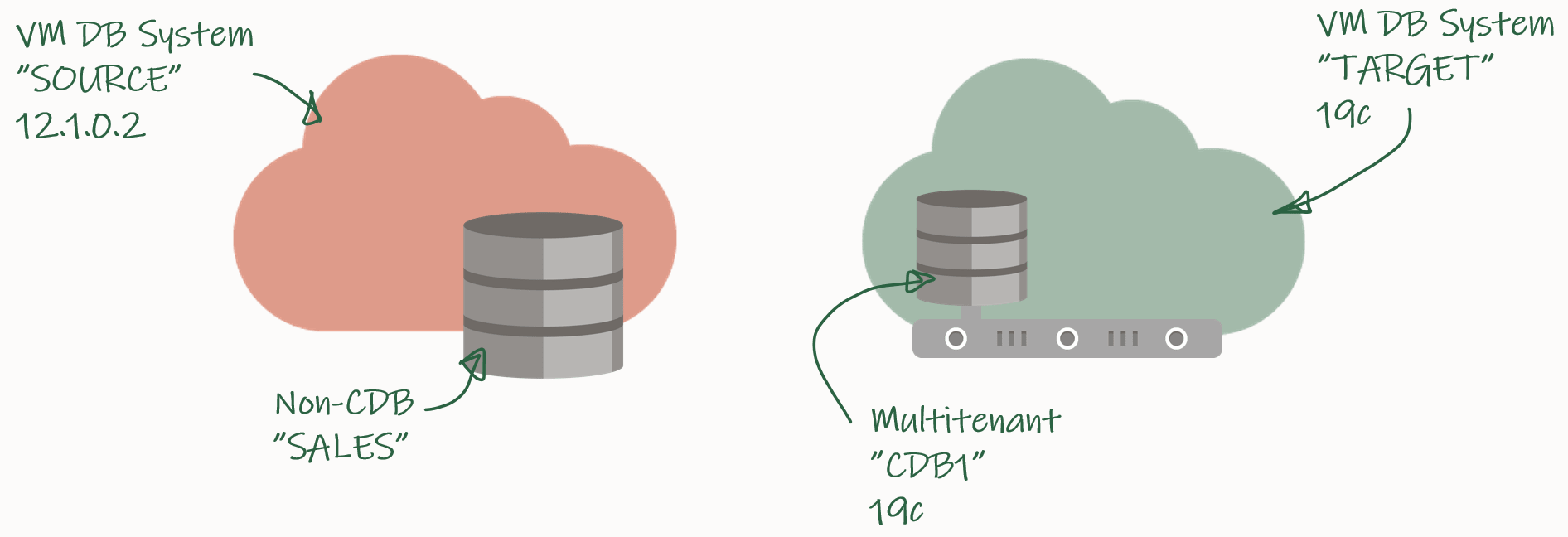

- Logical Online Migration

- Logical Online Migration to DBCS

- Logical Offline Migration to Autonomous Database

- Logical Online Migration and Testing

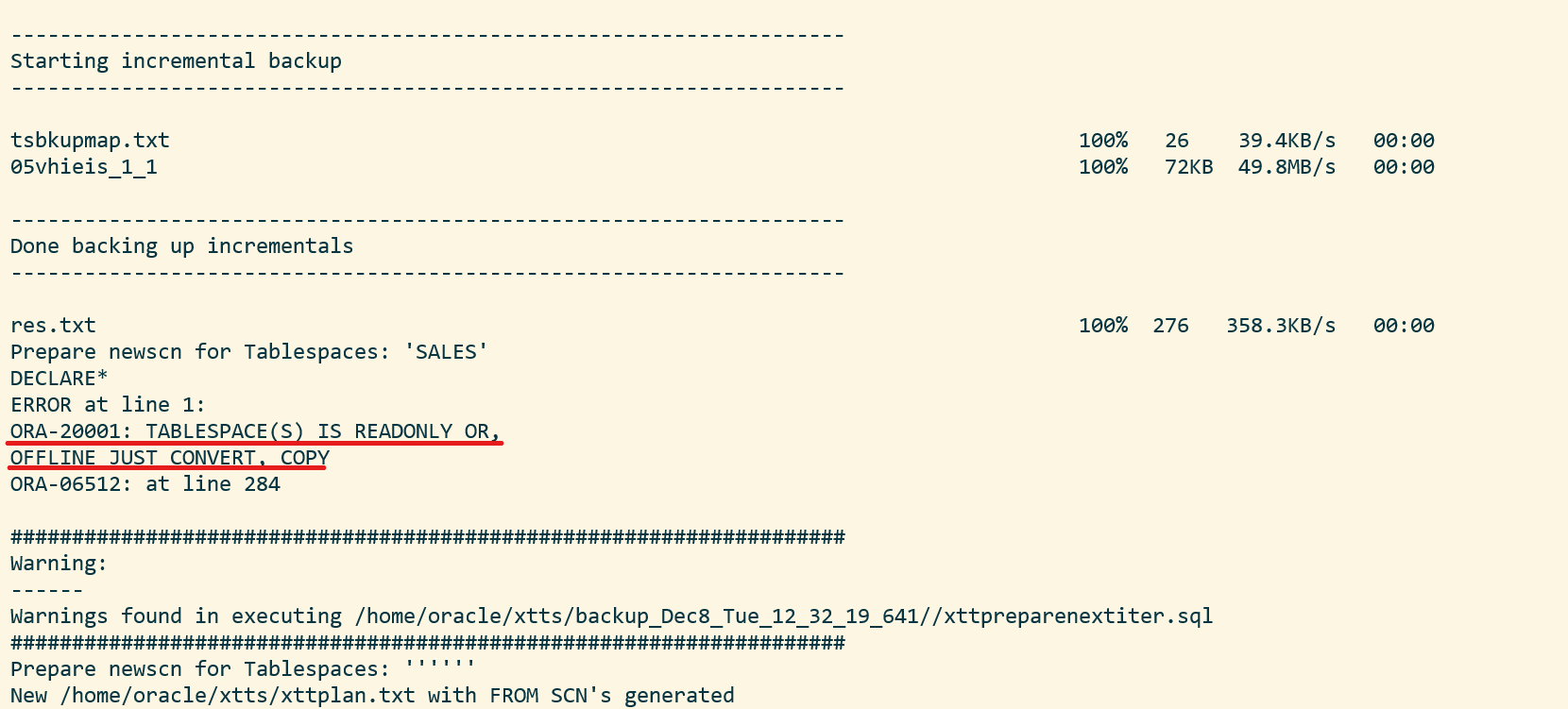

- Logical Online Migration of Very Large Databases

- Logical Online and Sequences

- Logical Migration and Statistics

- Logical Migration and the Final Touches (this post)

- Create GoldenGate Hub

- Monitor GoldenGate Replication

- The Pro Tips

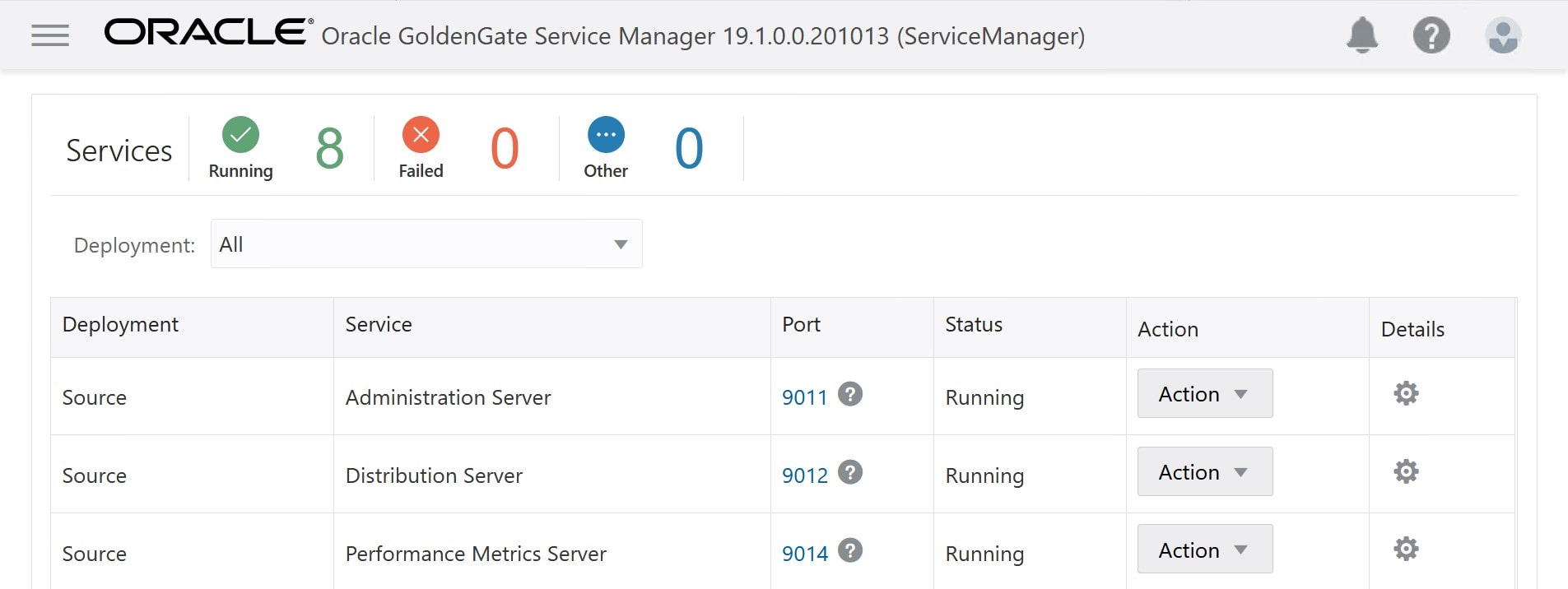

You can start and stop the services from the Action drop-down menu.

You can start and stop the services from the Action drop-down menu.