Back from Oracle AI World in Las Vegas, the conference formerly known as CloudWorld and, before that, OpenWorld. My badge now joins the others on my wall.

Slides / Labs

Here are the slides from our presentations:

- Upgrade to Oracle Database 26ai: Best Practices and Customer Experience

- Mastering Oracle Data Pump: Faster, Smarter, Simpler

- Operational Life Hacks with Oracle AutoUpgrade

- Patch Smarter, Not Harder in Oracle Database 19c and 23ai

- PRE-EVENT: Mastering Oracle 23ai Upgrades – Best Practices and Zero Downtime Techniques

You can try our hands-on labs for free in Oracle LiveLabs:

Impressions

- The conference featured many visionary keynotes, all available on YouTube.

- Oracle AI Database 26ai was released. Check Mike's blog post for details.

- Oracle AI World is special, particularly for someone like me who usually works from home. Over a few days, I reunited with colleagues, customers, and friends: a very intense social event for someone used to meeting people on Zoom. It's also the time of year when I get to spend time with my team.

-

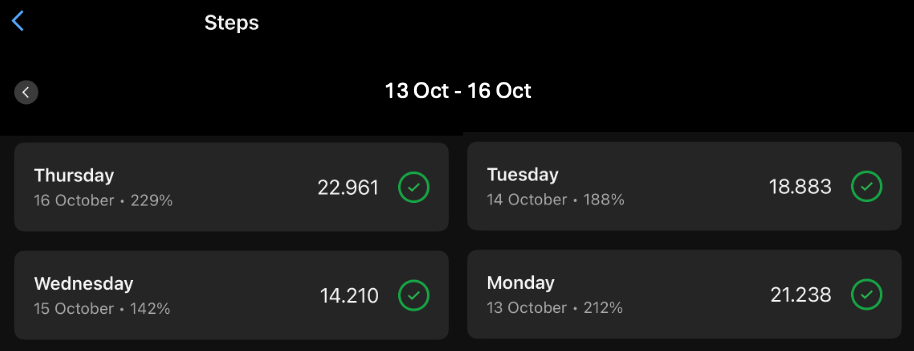

Long days left me with sore feet from extended standing and walking.

- The motto of the conference: AI is changing everything! I only had time to play with AI-generated pictures.

- A nine-hour time difference usually gives me jet lag, but not this time. I used the Timeshifter app, drank lots of water with electrolytes (no caffeine), got sunshine and darkness at the right times, and took melatonin (this is not a medical endorsement; always check with your doctor). I recovered quickly. If you suffer from jet lag too, give it a shot.

- Tip for future conferences: fruit and a protein bar in my bag served as emergency fuel when meals were delayed.

- My first Air Canada flight was a pleasure. I flew on a Boeing Dreamliner, my second favorite after the Airbus A350. As an aviation geek, I love watching planes. What's your favorite aircraft?

- On approach to my layover in Toronto, I saw Canada's vast autumn forests from above. A magnificent sight! I'd love to visit Canada in autumn. Any recommendations for must-see sights?

-

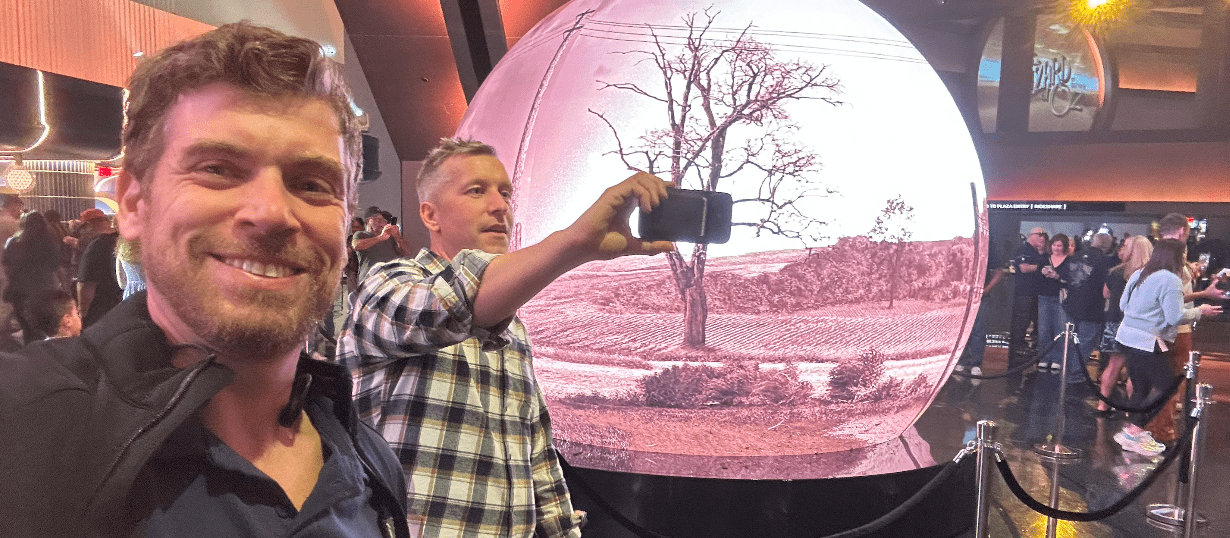

Saw a remake of The Wizard of Oz at The Sphere. What a wild ride—unbelievable. If you visit Las Vegas, catch a show there. That’s a true big screen.

So many people were doing selfies, that we had to make a selfie-with-a-selfie.

Job Done

- That's it! We celebrated an amazing conference with the team and said our goodbyes.

- Stay tuned for news on the upcoming Oracle AI World Tour 2026, coming soon to a city near you.

- Next year's conference returns to The Venetian in late October. Hope to see you there! If you have a story to share, reach out; perhaps you can present it with us.

- THANKS to everyone who took part in making Oracle AI World a big success!