Exporting data from your production database can be a pain for several reasons:

- You probably want a consistent export so you set the Data Pump parameter FLASHBACK_TIME. That requires a lot of undo space. If there is heavy activity on the database, then you risk running into ORA-01555.

- You want export the data quickly using the parallel options in Data Pump. The quicker you export, the less susceptible you also are to ORA-01555. But parallel uses more resources.

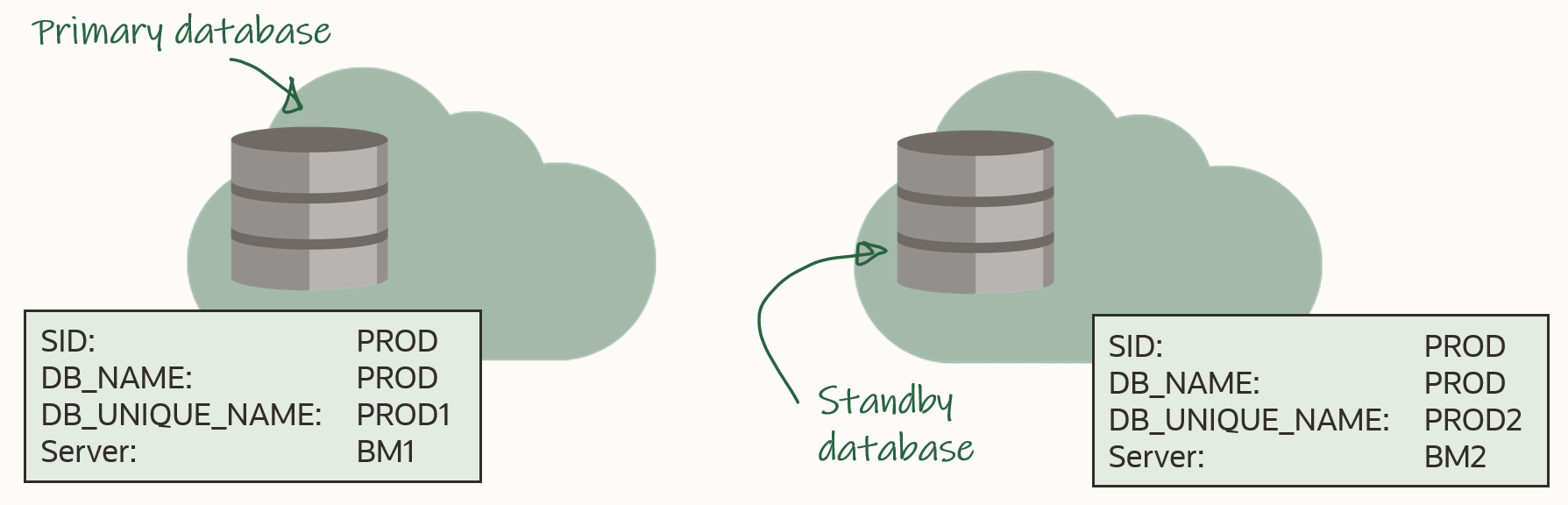

If you have an Active Data Guard, you can export your data from the standby database. It should be straightforward, right? An export is after all just reading data…

But Data Pump is not just a simple export tool. It is quite advanced – and to support all that functionality it does require a master table to be created which can be used to coordinate the operation. Further, it uses Advanced Queueing (AQ) for communicating between the processes. And AQ also requires a writable database.

But how about DML Redirect that was introduced in Oracle Database 19c? Can it be used to handle those few DMLs? No, because creation of the master table is a DDL – not a DML. If you try, you will fail:

$ expdp system@localhost:1521/pdb1 schemas=SH directory=mydir

Export: Release 19.0.0.0.0 - Production on Mon Apr 12 18:19:22 2021

Version 19.10.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

Password:

Connected to: Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 - Production

ORA-31626: job does not exist

ORA-31633: unable to create master table "SYSTEM.SYS_EXPORT_SCHEMA_05"

ORA-06512: at "SYS.DBMS_SYS_ERROR", line 95

ORA-06512: at "SYS.KUPV$FT", line 1163

ORA-16000: database or pluggable database open for read-only access

ORA-06512: at "SYS.KUPV$FT", line 1056

ORA-06512: at "SYS.KUPV$FT", line 1044

Solution

I turned on my bat signal and got help from my fellow Product Manager, Pieter Van Puymbroeck.

Photo by Ali Kokab on Unsplash

Photo by Ali Kokab on Unsplash

Use a snapshot standby, he said. Of course – Snapshot Standby is part of Enterprise Edition, so if you have Data Guard, you also have Snapshot Standby. If you need a quick recap on what a snapshot standby database is, you should check out this video.

First, I convert my physical standby database (named CDB19_fra3zt) into a snapshot standby database:

DGMGRL> convert database 'CDB19_fra3zt' to snapshot standby;

Next, create a directory object that can be used with Data Pump:

SQL> create directory mydir as '/tmp';

Finally, start an export. Notice that I haven’t set FLASHBACK_TIME. When exporting from a snapshot standby there are probably no other users on the database, so you don’t need to worry about consistency of the export:

$ expdp system schemas=sales directory=mydir

My data has now been exported, and it is time to convert my snapshot standby database back into a physical standby database to properly protect my precious database:

DGMGRL> convert database 'CDB19_fra3zt' to physical standby;

But

Will this jeopardize my primary database. No, but ….

Your primary database is still protected, because log files are still sent to the standby database, but not applied. What will then happen if I need to switch over or fail over?

- Convert the snapshot standby database back into a physical standby database

- That will implicitly issue a Flashback Database and revert all the changes made

- Then catch up with the primary by applying all the log files

- Finally, complete the switchover or failover

Bottomline, it will take longer! The flashback operation is really fast and should be done within minutes. After all, Data Pump isn’t making that many changes. Only the master table and some AQ stuff. How much time then to do log apply? That depends entirely on how big the apply lag is.

So, if you choose to go down this path be prepared that in the event of a switchover or a failover, it will take a little longer until the standby is open for business. Unless, you have two standby databases, then you will still have run ready to jump in immediately.

GoldenGate

If you need the Data Pump export to perform an initial load for GoldenGate, then you might want to control the SCN at which the export is happening. If so, cancel redo apply on the standby database and roll forward to the desired SCN:

alter database recover managed standby database cancel;

alter database recover managed standby database until change n;

Before converting the standby database to snapshot standby:

alter database convert to snapshot standby;

Later on, when you need to start replication in GoldenGate you can use the following option:

START REPLICAT ATCSN n

It Must Be From Physical Standby

On Twitter I was made aware that AWS RDS does not allow snapshot standby. The user referred to a MOS note describing how to export from a physical standby database. You need a proxy database and a database link. You find the details in How To Use DataPump Export (EXPDP) To Export From Physical Standby Database (Doc ID 1356592.1).

I recommend using this method only as a last resort. It is more cumbersome and you don’t get the same performance as via a snapshot standby database. Using Data Pump over a database link limits certain parallel capabilities.

Conclusion

You can export from your Data Guard, if you convert it temporarily to a snapshot standby. Be prepared that a switchover or failover operation will take longer.

If you want to know more about Data Pump, you should check out our webinar Migration Strategies – Insights, Tips and Secrets.

And finally, speaking of Data Pump, remember to get the Data Pump bundle patch (bug 32551008) as Roy mentioned on Twitter.