Following a previous blog post, here are all the details on automated upgrades in OCI and (possibly) the answers to your questions.

Precheck

The precheck ensures the database is ready to upgrade. It uses DBUA, which in turn uses preupgrade.jar to execute the checks. It is similar to running AutoUpgrade in analyze mode. The check is non-intrusive and can be executed while the database is in use.

Normally, when you use preupgrade.jar, we recommend that you download the latest version from My Oracle Support. However, this is not possible when you use the tooling. The new target Oracle Home is always deployed as part of the precheck process and deleted again after the precheck, so there is no way to replace the preupgrade.jar package. You must use the version of preupgrade.jar that comes with the Oracle Home.

If there are no issues that prevent you from upgrading, you will see this message:

However, it could also be that there is an error in the database that must be fixed:

If you want to see the output from the precheck you must log on to the database host and find the file:

vi $ORACLE_BASE/cfgtoollogs/dbua/upgrade<timestamp>/$ORACLE_UNQNAME/upgrade.xml

Only the XML output is available, which might be a little hard to read. If you prefer, you can also download AutoUpgrade to the server and run it in analyze mode. It produces a much more readable report, and it works even if the target Oracle Home is not present. Create a simple config file (DB11204.cfg in this example):

upg1.sid=DB11204

upg1.source_home=/u01/app/oracle/product/11.2.0.4/dbhome_1

upg1.target_version=19

And now start AutoUpgrade in analyze mode:

java -jar autoupgrade.jar -config DB11204.cfg -mode analyze
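
The config file and the analyze run can also be combined in a small script. This is only a sketch, not part of the OCI tooling: the working directory CFG_DIR is a made-up variable, and the script assumes autoupgrade.jar sits in the current directory. It skips the analyze run if Java or the jar file is missing.

```shell
#!/bin/sh
# Sketch: write the AutoUpgrade config shown above and run analyze mode.
# CFG_DIR is a hypothetical working directory; adjust it to your environment.
CFG_DIR="${CFG_DIR:-$(mktemp -d)}"
CFG_FILE="$CFG_DIR/DB11204.cfg"

# Same three entries as in the config file above.
cat > "$CFG_FILE" <<'EOF'
upg1.sid=DB11204
upg1.source_home=/u01/app/oracle/product/11.2.0.4/dbhome_1
upg1.target_version=19
EOF

echo "Config written to $CFG_FILE"

# Only start the analysis when Java and the AutoUpgrade jar are available.
if command -v java >/dev/null 2>&1 && [ -f autoupgrade.jar ]; then
    java -jar autoupgrade.jar -config "$CFG_FILE" -mode analyze
else
    echo "java or autoupgrade.jar not found; skipping analyze run"
fi
```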

You can use the preupgrade report to determine which issues prevent the upgrade from starting.

The database must be in ARCHIVELOG mode, and the size of your Fast Recovery Area (FRA) must be at least 15G (parameter db_recovery_file_dest_size). In addition, you must have 15G of free space on the mount point that hosts the FRA.
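
The free-space requirement is easy to verify up front. The sketch below covers only that part, using df. The helper has_free_gb and the variable FRA_PATH are made up for this example, and FRA_PATH defaults to / only so the script runs anywhere, so point it at the mount point that hosts your FRA. ARCHIVELOG mode and the FRA size can be checked in SQL*Plus with `select log_mode from v$database;` and `show parameter db_recovery_file_dest_size`.

```shell
#!/bin/sh
# Sketch: check that a mount point has at least N GB of free space.
# has_free_gb is a hypothetical helper, not part of any Oracle tool.
has_free_gb() {
    path=$1
    min_gb=$2
    # df -Pk prints POSIX-format output in 1 KB blocks; column 4 is "Available".
    avail_kb=$(df -Pk "$path" | awk 'NR==2 {print $4}')
    [ "$avail_kb" -ge $((min_gb * 1024 * 1024)) ]
}

# FRA_PATH is a placeholder; set it to the mount point that hosts your FRA.
FRA_PATH="${FRA_PATH:-/}"
if has_free_gb "$FRA_PATH" 15; then
    echo "OK: at least 15G free on $FRA_PATH"
else
    echo "WARNING: less than 15G free on $FRA_PATH"
fi
```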

Upgrade

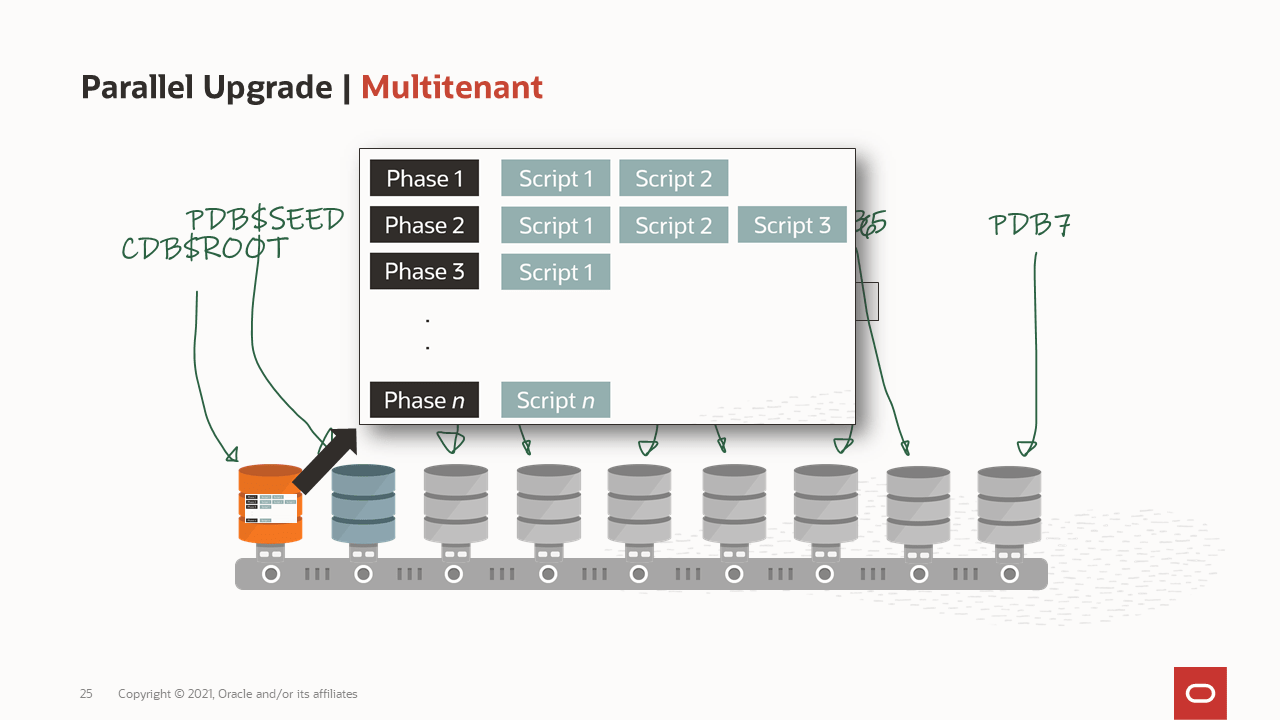

When you upgrade your database, all PDBs in the database are upgraded as well. There is no way to change it. If a PDB is closed when the upgrade starts, it is opened and upgraded.

After the upgrade, the PDB is left open in READ WRITE mode. But the state is not saved, so after a CDB restart, the PDB will start in whatever state was previously saved.
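
If you want the PDBs to come up open after the next CDB restart, you can save their state yourself once the upgrade has completed. The following is a minimal sketch, assuming you run it as the oracle user with the environment set to the upgraded CDB. The file name save_pdb_state.sql is made up for this example, and the script only writes the SQL file if SQL*Plus is not found.

```shell
#!/bin/sh
# Sketch: persist the current open mode of all PDBs across CDB restarts.
# save_pdb_state.sql is a hypothetical file name.
SQL_DIR="${SQL_DIR:-$(mktemp -d)}"
SQL_FILE="$SQL_DIR/save_pdb_state.sql"

cat > "$SQL_FILE" <<'EOF'
-- Show the current open mode of each PDB, then save it.
select name, open_mode from v$pdbs;
alter pluggable database all save state;
exit
EOF

# Run the script only where SQL*Plus is available.
if command -v sqlplus >/dev/null 2>&1; then
    sqlplus -S / as sysdba @"$SQL_FILE"
else
    echo "sqlplus not found; SQL written to $SQL_FILE"
fi
```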

The first version of the tooling does not support standby databases. If your database is a primary database, you must remove the standby database, upgrade, and then recreate the standby database. Streamlining this is planned as a future enhancement.

Fallback

Enterprise Edition databases are protected by a guaranteed restore point (GRP) and Flashback Database. The tooling automatically creates the GRP before it starts to work on the database. If an error occurs during the upgrade, you can use the OCI console to initiate a roll back.

After a successful upgrade, the GRP is dropped again. The GRP only protects the database during the upgrade, so you can’t rely on it if you decide to fall back after the upgrade. Let’s say that your testing reveals a critical problem after the upgrade; then your only fallback mechanism is to restore a backup.

Since Flashback Database is an Enterprise Edition feature, this fallback mechanism is not available on Standard Edition databases.

In addition, it is strongly recommended that you perform a manual backup of the database before you start the upgrade. The console will also give you this warning, before you can start the upgrade.

Monitoring and Troubleshooting

When you have started the upgrade, you can’t monitor it from the console. You must log on to the host. When you do so, be aware that the timestamps shown in the OCI console are in UTC, but the timestamps in the log files on the host are in local time (depending on your region).
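
To line up a console timestamp with the log files, you can convert it to the host's local time. A minimal sketch, assuming GNU date is available on the host; utc_to_local is a made-up helper name and the timestamp is just an example value.

```shell
#!/bin/sh
# Sketch: convert a UTC timestamp (as shown in the OCI console) to host local time.
# utc_to_local is a hypothetical helper; it relies on GNU date (-d option).
utc_to_local() {
    date -d "$1 UTC" '+%Y-%m-%d %H:%M:%S'
}

# Example value only; replace it with the timestamp from the console.
utc_to_local "2021-03-01 12:00:00"
```

The result depends on the time zone configured on the host, so it makes sense to run the conversion on the DB System itself.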

Using dbcli

Log on as root and use the dbcli tool to monitor the progress. First, list jobs:

[root@host]$ dbcli list-jobs

Which should produce a list like this:

Next, you can get additional information about the job using the ID:

[root@host]$ dbcli describe-job -i <id>

Which gives you more details:

Using DBUA Log Files

But you can get even better information by looking in the log files from DBUA. Use the job id from the dbcli command to find the log file:

[oracle@host]$ export ORACLE_BASE=/u01/app/oracle

[oracle@host]$ export DBCLI_JOBID=f4b2597f-990f-4442-a774-153f3713fb7a

[oracle@host]$ tail -f -n 10 $ORACLE_BASE/cfgtoollogs/dbua/$DBCLI_JOBID/silent.log

And for really detailed information look in this directory:

[oracle@host]$ export ORACLE_BASE=/u01/app/oracle

[oracle@host]$ export DBCLI_JOBID=f4b2597f-990f-4442-a774-153f3713fb7a

[oracle@host]$ cd $ORACLE_BASE/cfgtoollogs/dbua/$DBCLI_JOBID/$ORACLE_UNQNAME

Using DCS Agent

The OCI control plane communicates with your DB System using an agent, and sometimes it can be useful to look in those logs:

[root@host]$ cd /opt/oracle/dcs/log

[root@host]$ vi dcs-agent.log

To find the log entries that are related to a specific upgrade, search for the job ID:

[root@host]$ grep "<job-id>" dcs-agent.log | more

Q&A

Which version and release update can I upgrade to?

The tooling only allows upgrades to Database 19c. If you need to upgrade to any other version, you must do it manually.

You can decide to upgrade to an Oracle provided image or a custom image:

However, for both types of images, the Release Update (or patch level) must be the latest or one of the previous two Release Updates. Even if you have a custom database software image that is older, it can’t be used. You must upgrade to one of the recent Release Updates.

If you select 19.0.0.0 you will not get the base release, but the latest Release Update. If you use the APIs this is a smart way of specifying that you always want the latest Release Update.
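
The "latest or previous two" rule can be illustrated with a small check. This is only a sketch: ru_allowed is a made-up helper, it assumes that 19c Release Updates are numbered consecutively (19.8, 19.9, 19.10, and so on), and the value of LATEST_RU is chosen purely for illustration.

```shell
#!/bin/sh
# Sketch: is a 19c Release Update inside the allowed window (latest or previous two)?
# ru_allowed is a hypothetical helper; arguments are the RU numbers after "19.",
# for example 10 for 19.10.
ru_allowed() {
    candidate=$1
    latest=$2
    [ "$candidate" -le "$latest" ] && [ "$candidate" -ge $((latest - 2)) ]
}

LATEST_RU=10   # assumption for illustration only
for ru in 7 8 9 10; do
    if ru_allowed "$ru" "$LATEST_RU"; then
        echo "19.$ru: allowed"
    else
        echo "19.$ru: too old"
    fi
done
```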

Where are my log files?

The output from the precheck is stored here:

- $ORACLE_BASE/cfgtoollogs/dbua/upgrade<timestamp>

The output from the actual upgrade is stored here:

- $ORACLE_BASE/cfgtoollogs/dbua/<job-id>

- $ORACLE_BASE/cfgtoollogs/dbua/<job-id>/$ORACLE_UNQNAME

In addition, you can get details about the upgrade using dbcli:

[root@host]$ dbcli list-jobs

[root@host]$ dbcli describe-job -i <job-id>

Why is it taking so long to perform a precheck?

It consists of three phases:

- Deploy new Oracle Home to the VM DB System

- Precheck of the database

- Removing the new Oracle Home

The precheck itself (phase 2) is really fast, just as fast as running AutoUpgrade in analyze mode or preupgrade.jar. The extra time is needed to deploy and remove the Oracle Home. The procedure repeats for each execution of the precheck, and a new Oracle Home is deployed every time. It is never re-used.

Why is the upgrade slower than if I do it manually?

Typically, when you upgrade a database manually, you have already deployed the new Oracle Home outside of the maintenance window. When you use the tooling, this happens inside the maintenance window. The tooling can’t deploy an Oracle Home prior to the upgrade. In addition, the upgrade is executed with DBUA using default options, which, for instance, means that the time zone file is upgraded as well.

If you are sensitive to downtime and would like to complete the upgrade faster, you must perform the upgrade manually.

Will my 11.2.0.4 database get converted to a PDB?

No, the database is upgraded as-is and there is no PDB conversion. We are working on making it possible to perform the non-CDB conversion as well. If you must convert the non-CDB to a PDB, you must move the database to a new VM DB System that already has a CDB provisioned. In that case, I would recommend that you use the manual upgrade and plug-in as described in another blog post.

Can I perform an automated upgrade using dbcli?

No. Although the command line help of dbcli suggests that such an option exists, it can’t be used.

Other Posts in This Series

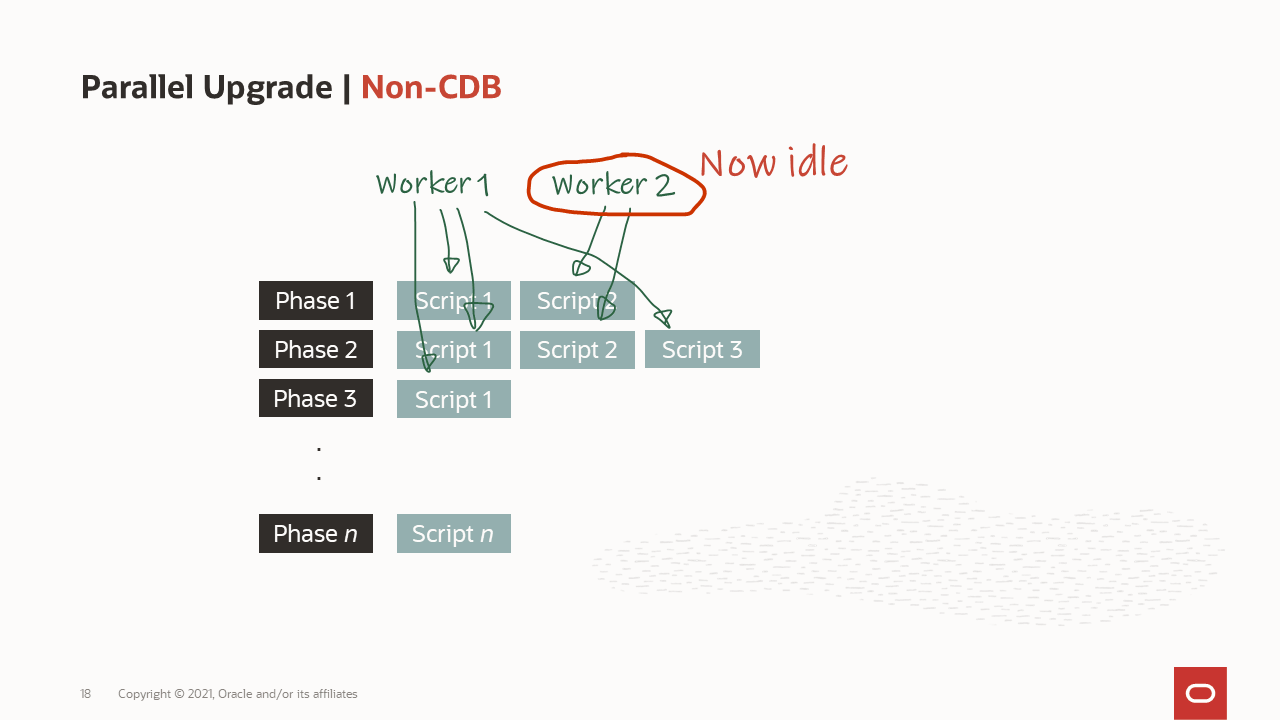

How are the workers processing the upgrade?

How are the workers processing the upgrade? How is a container database upgraded?

How is a container database upgraded? What goes on in the phases of the upgrade?

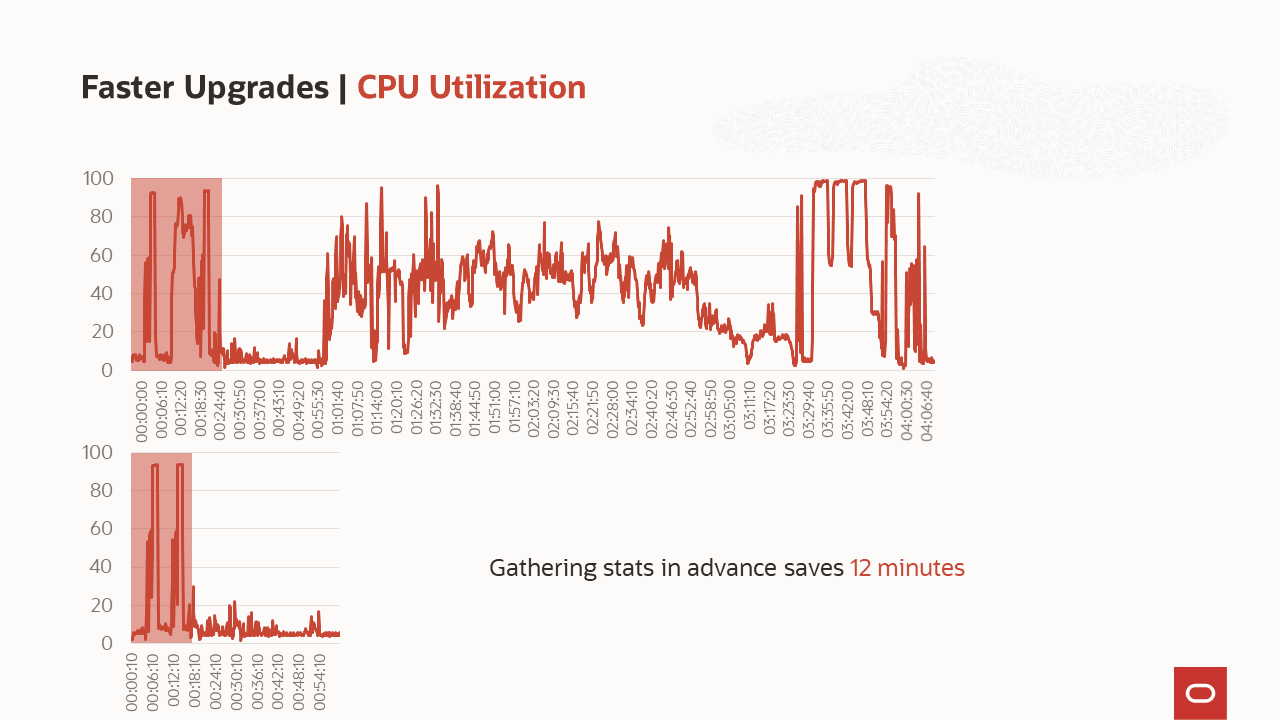

What goes on in the phases of the upgrade? What is the benefit of gathering stats before the upgrade?

What is the benefit of gathering stats before the upgrade? A list of some of the comprehensive checks that are executed by AutoUpgrade

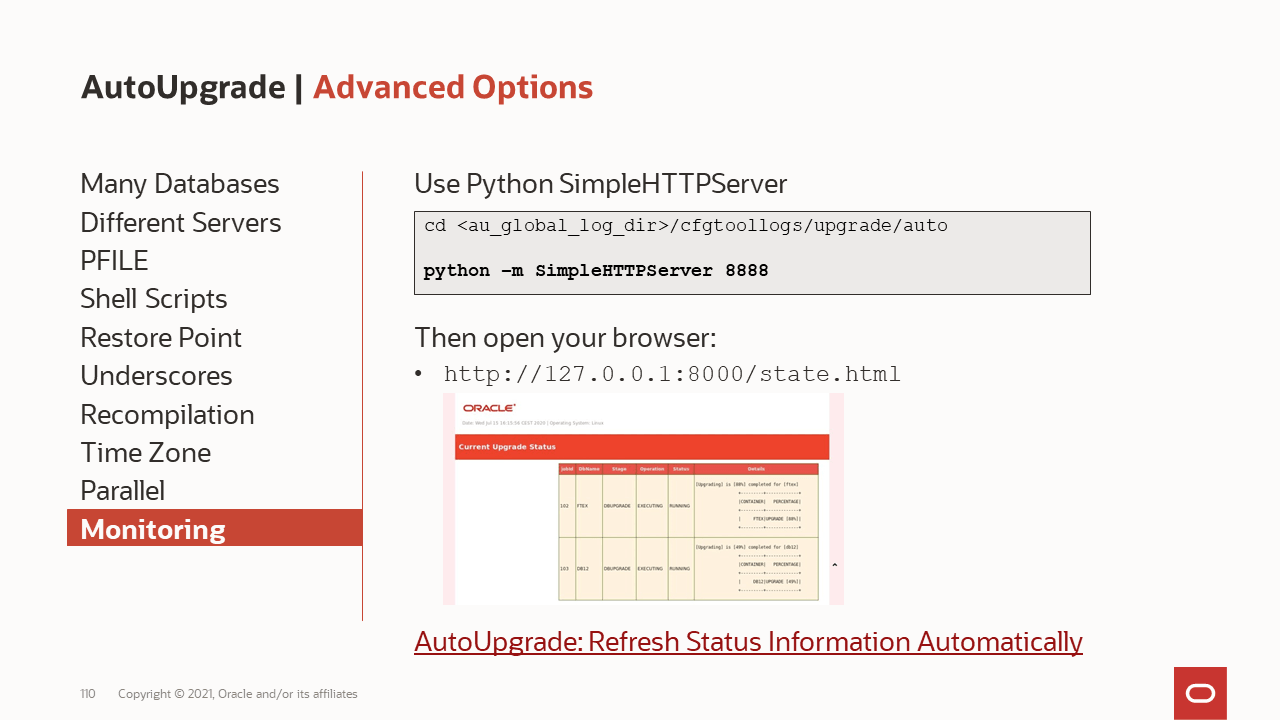

A list of some of the comprehensive checks that are executed by AutoUpgrade One page monitoring

One page monitoring What to consider when doing upgrades from very old releases

What to consider when doing upgrades from very old releases
